06 xDS: Filesystem Subscription

clusters:
- name:
  ...
  eds_cluster_config:
    service_name:
    eds_config:
      path: ...  # a ConfigSource; exactly one of path, api_config_source or ads can be used

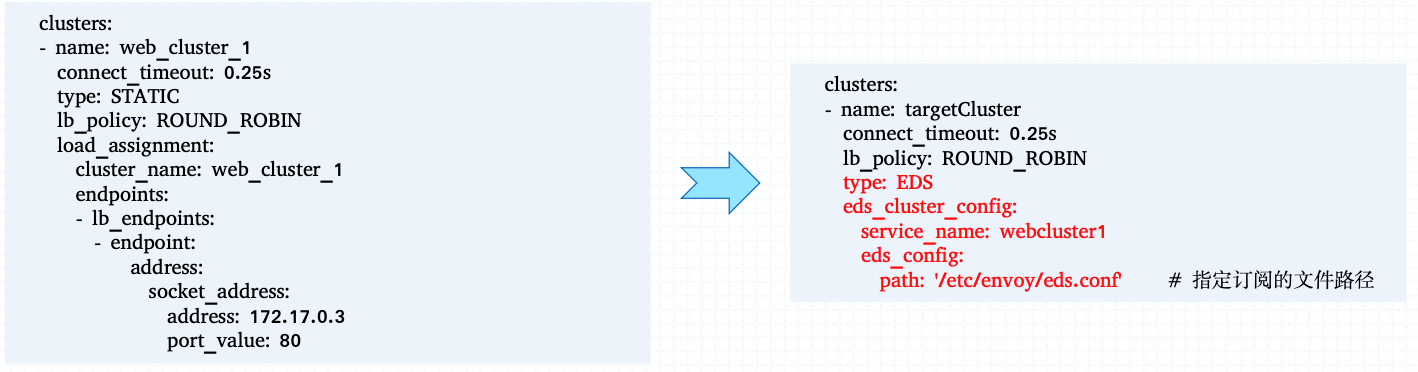

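Taking EDS as an example: the Cluster is defined statically, while its endpoints are discovered dynamically through EDS.

The following example converts the endpoint definitions from static configuration (STATIC) to dynamic discovery through EDS: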

- Prepare the required files.

File layout of the docker-compose directory:

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# tree .
.
├── docker-compose.yaml
├── Dockerfile-envoy
├── eds.conf
└── envoy.yaml

0 directories, 4 files

Prepare the Dockerfile-envoy file, which defines the envoy image:

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# cat Dockerfile-envoy
FROM envoyproxy/envoy-alpine:v1.11.1
COPY ./eds.conf* ./envoy.yaml /etc/envoy/
RUN apk update && apk --no-cache add curl

Prepare an empty eds.conf file. The filesystem-based subscription will later be driven by editing this file:

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# cat eds.conf
{}

Prepare the envoy configuration file (envoy.yaml):

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# cat envoy.yaml
node:
  id: envoy_001
  cluster: testcluster

admin:
  access_log_path: /tmp/admin_access.log
  address:
    socket_address: { address: 0.0.0.0, port_value: 9901 }

static_resources:
  listeners:
  - name: listener_http
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.http_connection_manager
        config:
          stat_prefix: egress_http
          codec_type: AUTO
          route_config:
            name: test_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: webcluster1 }
          http_filters:
          - name: envoy.router

  clusters:
  - name: webcluster1
    connect_timeout: 0.25s
    type: EDS
    lb_policy: ROUND_ROBIN
    eds_cluster_config:
      service_name: webcluster1
      eds_config:
        path: '/etc/envoy/eds.conf'  # filesystem-based EDS

Prepare the docker-compose file (docker-compose.yaml).

Key point of the docker-compose file: all 3 containers share the envoymesh network.

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# cat docker-compose.yaml
version: '3.3'

services:
  envoy:
    build:
      context: .
      dockerfile: Dockerfile-envoy
    networks:
      envoymesh:
        aliases:
        - envoy
    expose:
    - "80"
    ports:
    - "8080:80"

  webserver1:
    image: ikubernetes/mini-http-server:v0.3
    networks:
      envoymesh:
        aliases:
        - webserver1
    expose:
    - "8081"
    depends_on:
    - "envoy"

  webserver2:
    image: ikubernetes/mini-http-server:v0.3
    networks:
      envoymesh:
        aliases:
        - webserver2
    expose:
    - "8081"
    depends_on:
    - "envoy"

networks:
  envoymesh: {}

- Start docker-compose

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# docker-compose up -d
root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# docker-compose ps
           Name                        Command              State   Ports
---------------------------------------------------------------------------------------------------------------------
edsfilesystem_envoy_1        /docker-entrypoint.sh envo ...   Up      10000/tcp, 0.0.0.0:8080->80/tcp,:::8080->80/tcp
edsfilesystem_webserver1_1   /bin/httpserver                  Up      8081/tcp
edsfilesystem_webserver2_1   /bin/httpserver                  Up      8081/tcp

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# docker exec -it edsfilesystem_webserver1_1 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
161: eth0@if162: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ac:1c:00:04 brd ff:ff:ff:ff:ff:ff
    inet 172.28.0.4/16 brd 172.28.255.255 scope global eth0
       valid_lft forever preferred_lft forever

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# docker exec -it edsfilesystem_webserver2_1 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
159: eth0@if160: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ac:1c:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.28.0.3/16 brd 172.28.255.255 scope global eth0
       valid_lft forever preferred_lft forever

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# docker exec -it edsfilesystem_envoy_1 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
157: eth0@if158: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ac:1c:00:02 brd ff:ff:ff:ff:ff:ff
    inet 172.28.0.2/16 brd 172.28.255.255 scope global eth0
       valid_lft forever preferred_lft forever

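At this point the filesystem-based EDS configuration is empty, so the cluster webcluster1 has no endpoints. You can confirm this through the admin interface: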

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# curl 172.28.0.2:9901/clusters
webcluster1::default_priority::max_connections::1024
webcluster1::default_priority::max_pending_requests::1024
webcluster1::default_priority::max_requests::1024
webcluster1::default_priority::max_retries::3
webcluster1::high_priority::max_connections::1024
webcluster1::high_priority::max_pending_requests::1024
webcluster1::high_priority::max_requests::1024
webcluster1::high_priority::max_retries::3
webcluster1::added_via_api::false
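Reading this admin output by eye gets tedious once endpoints appear. Below is a minimal Python sketch that turns the text returned by /clusters into a nested dict, which makes it easy to list a cluster's hosts. The helper names `parse_clusters` and `fetch_clusters` are hypothetical. The admin address 172.28.0.2:9901 comes from this lab. The parser assumes the `cluster::host::stat::value` line layout shown here and IPv4 host addresses.

```python
import urllib.request


def parse_clusters(text):
    """Parse Envoy admin /clusters text output into {cluster: {host: {stat: value}}}.

    Host lines look like 'webcluster1::172.28.0.3:8081::cx_active::0'.
    Cluster-level lines ('...::default_priority::max_connections::1024',
    '...::added_via_api::false') are skipped because their second field is
    not a host:port. Assumes IPv4 addresses: IPv6 literals contain '::' and
    would need a different split.
    """
    result = {}
    for line in text.splitlines():
        parts = line.strip().split("::")
        if len(parts) != 4 or ":" not in parts[1]:
            continue  # cluster-level or empty line
        cluster, host, stat, value = parts
        result.setdefault(cluster, {}).setdefault(host, {})[stat] = value
    return result


def fetch_clusters(admin_url="http://172.28.0.2:9901"):
    """Fetch /clusters from the admin interface (default address taken from this lab)."""
    with urllib.request.urlopen(admin_url + "/clusters", timeout=5) as resp:
        return parse_clusters(resp.read().decode("utf-8"))
```

Run from the host, `list(fetch_clusters().get("webcluster1", {}))` should give an empty list at this step. After the eds.conf updates below, it should list the endpoint addresses.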

- Edit eds.conf and add one endpoint (172.28.0.3:8081)

# Enter the envoy container and run the following steps
root@ubuntu1:~# docker exec -it edsfilesystem_envoy_1 sh
/ # cd /etc/envoy/
/etc/envoy # cat eds.conf
{}
# Edit eds.conf and add the endpoint 172.28.0.3:8081
/etc/envoy # vi eds.conf
# The file /etc/envoy/eds.conf must hold the response in DiscoveryResponse message format. For example, when an upstream server at 172.28.0.3 is available to serve traffic, the response is written in JSON as follows:

/etc/envoy # cat eds.conf
{
  "version_info": "1",
  "resources": [
    {
      "@type": "type.googleapis.com/envoy.api.v2.ClusterLoadAssignment",
      "cluster_name": "webcluster1",
      "endpoints": [
        {
          "lb_endpoints": [
            {
              "endpoint": {
                "address": {
                  "socket_address": {
                    "address": "172.28.0.3",
                    "port_value": 8081
                  }
                }
              }
            }
          ]
        }
      ]
    }
  ]
}
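Writing this nested DiscoveryResponse by hand is error-prone. Below is a minimal Python sketch that builds the same structure as the eds.conf above from a list of (ip, port) pairs. `build_eds_response` is a hypothetical helper name. The v2 type URL is copied from the example, where it matches the v1.11 image used in this lab.

```python
import json

# Type URL copied from the eds.conf example (v2 API, as used by Envoy v1.11).
EDS_TYPE_URL = "type.googleapis.com/envoy.api.v2.ClusterLoadAssignment"


def build_eds_response(version, cluster_name, endpoints):
    """Build a DiscoveryResponse dict holding one ClusterLoadAssignment.

    endpoints: iterable of (ip, port) tuples, all placed in a single
    LocalityLbEndpoints entry, as in the article's eds.conf.
    """
    lb_endpoints = [
        {"endpoint": {"address": {"socket_address": {"address": ip, "port_value": port}}}}
        for ip, port in endpoints
    ]
    return {
        "version_info": str(version),
        "resources": [
            {
                "@type": EDS_TYPE_URL,
                "cluster_name": cluster_name,
                "endpoints": [{"lb_endpoints": lb_endpoints}],
            }
        ],
    }


if __name__ == "__main__":
    # Prints JSON equivalent to the eds.conf shown above.
    print(json.dumps(build_eds_response(1, "webcluster1", [("172.28.0.3", 8081)]), indent=2))
```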

Now check the cluster information:

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# curl 172.28.0.2:9901/clusters
webcluster1::default_priority::max_connections::1024
webcluster1::default_priority::max_pending_requests::1024
webcluster1::default_priority::max_requests::1024
webcluster1::default_priority::max_retries::3
webcluster1::high_priority::max_connections::1024
webcluster1::high_priority::max_pending_requests::1024
webcluster1::high_priority::max_requests::1024
webcluster1::high_priority::max_retries::3
webcluster1::added_via_api::false

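The newly added endpoint does not appear. Envoy's filesystem subscription only watches the file path for moves (renames), because in general only moves are atomic. Editing eds.conf in place, as vi did here, therefore does not trigger a reload. You can trigger one manually by renaming the file away and back: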

/etc/envoy # mv eds.conf tmp && mv tmp eds.conf
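The mv round trip works because Envoy reacts when the file is moved into place. The same idea can be scripted: write the new content to a temporary file in the same directory, then rename it over eds.conf in one step, so Envoy never sees a half-written file. Below is a minimal Python sketch. It assumes the script runs somewhere that can write to the watched directory; the image built here only adds curl, so Python would have to be installed or the directory bind-mounted. `atomic_write_json` is a hypothetical helper.

```python
import json
import os
import tempfile


def atomic_write_json(path, data):
    """Replace `path` with `data` serialized as JSON via a single rename.

    The temp file is created in the same directory so that os.replace() is a
    rename within one filesystem, which POSIX makes atomic. That rename is the
    "move" event Envoy's filesystem subscription watches for.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".", suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(data, f, indent=2)
            f.flush()
            os.fsync(f.fileno())
        # mkstemp creates the file as 0600; make it readable like a normal config file.
        os.chmod(tmp_path, 0o644)
        os.replace(tmp_path, path)
    except BaseException:
        # Remove the temp file if anything failed before the rename completed.
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

Calling `atomic_write_json("/etc/envoy/eds.conf", new_response)`, where `new_response` is any DiscoveryResponse dict, plays the same role as the `mv eds.conf tmp && mv tmp eds.conf` trick.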

Check the cluster information again:

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# curl 172.28.0.2:9901/clusters
webcluster1::default_priority::max_connections::1024
webcluster1::default_priority::max_pending_requests::1024
webcluster1::default_priority::max_requests::1024
webcluster1::default_priority::max_retries::3
webcluster1::high_priority::max_connections::1024
webcluster1::high_priority::max_pending_requests::1024
webcluster1::high_priority::max_requests::1024
webcluster1::high_priority::max_retries::3
webcluster1::added_via_api::false
webcluster1::172.28.0.3:8081::cx_active::0
webcluster1::172.28.0.3:8081::cx_connect_fail::0
webcluster1::172.28.0.3:8081::cx_total::0
webcluster1::172.28.0.3:8081::rq_active::0
webcluster1::172.28.0.3:8081::rq_error::0
webcluster1::172.28.0.3:8081::rq_success::0
webcluster1::172.28.0.3:8081::rq_timeout::0
webcluster1::172.28.0.3:8081::rq_total::0
webcluster1::172.28.0.3:8081::hostname::
webcluster1::172.28.0.3:8081::health_flags::healthy
webcluster1::172.28.0.3:8081::weight::1
webcluster1::172.28.0.3:8081::region::
webcluster1::172.28.0.3:8081::zone::
webcluster1::172.28.0.3:8081::sub_zone::
webcluster1::172.28.0.3:8081::canary::false
webcluster1::172.28.0.3:8081::priority::0
webcluster1::172.28.0.3:8081::success_rate::-1
webcluster1::172.28.0.3:8081::local_origin_success_rate::-1

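The filesystem-based subscription is now in effect: the endpoint 172.28.0.3:8081 has been added to the cluster.

- EDS configuration update: suppose an upstream server at 172.28.0.4:8081 is added to this cluster. Change the subscribed file's content as shown below, then swap the result in for the original eds.conf: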

/etc/envoy # vi eds.conf
/etc/envoy # cat eds.conf
{
  "version_info": "2",
  "resources": [
    {
      "@type": "type.googleapis.com/envoy.api.v2.ClusterLoadAssignment",
      "cluster_name": "webcluster1",
      "endpoints": [
        {
          "lb_endpoints": [
            {
              "endpoint": {
                "address": {
                  "socket_address": {
                    "address": "172.28.0.3",
                    "port_value": 8081
                  }
                }
              }
            },
            {
              "endpoint": {
                "address": {
                  "socket_address": {
                    "address": "172.28.0.4",
                    "port_value": 8081
                  }
                }
              }
            }
          ]
        }
      ]
    }
  ]
}
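This update follows a repeatable pattern: copy the previous response, append the new lb_endpoint, and bump version_info. Below is a minimal Python sketch of that step. It assumes the layout used in this article (one ClusterLoadAssignment with one locality entry) and an integer-valued version_info string. `add_endpoint` is a hypothetical helper.

```python
import copy


def add_endpoint(response, ip, port):
    """Return a copy of an EDS DiscoveryResponse with one more lb_endpoint.

    version_info is incremented as an integer, following the "1" -> "2"
    convention of this article. If the address is already present, the
    endpoint list is left unchanged (the version is still bumped).
    The input dict is not modified.
    """
    new = copy.deepcopy(response)
    new["version_info"] = str(int(new["version_info"]) + 1)
    lb_endpoints = new["resources"][0]["endpoints"][0]["lb_endpoints"]
    addr = {"address": ip, "port_value": port}
    if not any(e["endpoint"]["address"]["socket_address"] == addr for e in lb_endpoints):
        lb_endpoints.append({"endpoint": {"address": {"socket_address": addr}}})
    return new
```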

/etc/envoy # mv eds.conf tmp && mv tmp eds.conf

Check the cluster information:

root@ubuntu1:~/servicemesh_in_practise/eds-filesystem# curl 172.28.0.2:9901/clusters
webcluster1::default_priority::max_connections::1024
webcluster1::default_priority::max_pending_requests::1024
webcluster1::default_priority::max_requests::1024
webcluster1::default_priority::max_retries::3
webcluster1::high_priority::max_connections::1024
webcluster1::high_priority::max_pending_requests::1024
webcluster1::high_priority::max_requests::1024
webcluster1::high_priority::max_retries::3
webcluster1::added_via_api::false
webcluster1::172.28.0.3:8081::cx_active::0
webcluster1::172.28.0.3:8081::cx_connect_fail::0
webcluster1::172.28.0.3:8081::cx_total::0
webcluster1::172.28.0.3:8081::rq_active::0
webcluster1::172.28.0.3:8081::rq_error::0
webcluster1::172.28.0.3:8081::rq_success::0
webcluster1::172.28.0.3:8081::rq_timeout::0
webcluster1::172.28.0.3:8081::rq_total::0
webcluster1::172.28.0.3:8081::hostname::
webcluster1::172.28.0.3:8081::health_flags::healthy
webcluster1::172.28.0.3:8081::weight::1
webcluster1::172.28.0.3:8081::region::
webcluster1::172.28.0.3:8081::zone::
webcluster1::172.28.0.3:8081::sub_zone::
webcluster1::172.28.0.3:8081::canary::false
webcluster1::172.28.0.3:8081::priority::0
webcluster1::172.28.0.3:8081::success_rate::-1
webcluster1::172.28.0.3:8081::local_origin_success_rate::-1
webcluster1::172.28.0.4:8081::cx_active::0
webcluster1::172.28.0.4:8081::cx_connect_fail::0
webcluster1::172.28.0.4:8081::cx_total::0
webcluster1::172.28.0.4:8081::rq_active::0
webcluster1::172.28.0.4:8081::rq_error::0
webcluster1::172.28.0.4:8081::rq_success::0
webcluster1::172.28.0.4:8081::rq_timeout::0
webcluster1::172.28.0.4:8081::rq_total::0
webcluster1::172.28.0.4:8081::hostname::
webcluster1::172.28.0.4:8081::health_flags::healthy
webcluster1::172.28.0.4:8081::weight::1
webcluster1::172.28.0.4:8081::region::
webcluster1::172.28.0.4:8081::zone::
webcluster1::172.28.0.4:8081::sub_zone::
webcluster1::172.28.0.4:8081::canary::false
webcluster1::172.28.0.4:8081::priority::0
webcluster1::172.28.0.4:8081::success_rate::-1
webcluster1::172.28.0.4:8081::local_origin_success_rate::-1

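The second endpoint has now also been added to the cluster.

Continuing the example above:

- Edit envoy.yaml and move the cluster configuration from static resources to dynamic resources:

dynamic_resources:
  cds_config:
    path: "/etc/envoy/cds.conf"

The complete configuration file: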

root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# cat envoy.yaml
node:
  id: envoy_001
  cluster: testcluster

admin:
  access_log_path: /tmp/admin_access.log
  address:
    socket_address: { address: 0.0.0.0, port_value: 9901 }

static_resources:
  listeners:
  - name: listener_http
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.http_connection_manager
        config:
          stat_prefix: egress_http
          codec_type: AUTO
          route_config:
            name: test_route
            virtual_hosts:
            - name: web_service_1
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: webcluster1 }
          http_filters:
          - name: envoy.router

dynamic_resources:
  cds_config:
    path: /etc/envoy/cds.conf

- Define the cds.conf configuration file (/etc/envoy/cds.conf), which holds the DiscoveryResponse:

# v1: CDS via filesystem-based dynamic discovery + statically configured endpoints
root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# cat cds.conf
{
  "version_info": "1",
  "resources": [
    {
      "@type": "type.googleapis.com/envoy.api.v2.Cluster",
      "name": "webcluster1",
      "connect_timeout": "0.25s",
      "lb_policy": "ROUND_ROBIN",
      "type": "STRICT_DNS",
      "load_assignment": {
        "cluster_name": "webcluster1",
        "endpoints": [
          {
            "lb_endpoints": [
              {
                "endpoint": {
                  "address": {
                    "socket_address": {
                      "address": "myservice",
                      "port_value": 8081
                    }
                  }
                }
              }
            ]
          }
        ]
      }
    }
  ]
}

# v2: CDS via filesystem-based dynamic discovery + EDS via filesystem-based dynamic discovery
root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# cat cds.conf.v2
{
  "version_info": "1",
  "resources": [{
    "@type": "type.googleapis.com/envoy.api.v2.Cluster",
    "name": "webcluster1",
    "connect_timeout": "0.25s",
    "lb_policy": "ROUND_ROBIN",
    "type": "EDS",
    "eds_cluster_config": {
      "service_name": "webcluster1",
      "eds_config": {
        "path": "/etc/envoy/eds.conf"
      }
    }
  }]
}
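The v2 cds.conf is what points the cluster at the filesystem-based EDS file. Below is a minimal Python sketch that emits the same Cluster resource. `build_cds_response` is a hypothetical helper. The defaults (0.25s timeout, ROUND_ROBIN) are copied from the example rather than required by Envoy.

```python
import json

# Type URL copied from cds.conf.v2 (v2 API, as used by Envoy v1.11).
CLUSTER_TYPE_URL = "type.googleapis.com/envoy.api.v2.Cluster"


def build_cds_response(version, cluster_name, eds_path,
                       connect_timeout="0.25s", lb_policy="ROUND_ROBIN"):
    """Build a CDS DiscoveryResponse with one EDS cluster whose endpoints come from eds_path."""
    cluster = {
        "@type": CLUSTER_TYPE_URL,
        "name": cluster_name,
        "connect_timeout": connect_timeout,
        "lb_policy": lb_policy,
        "type": "EDS",
        "eds_cluster_config": {
            # Name Envoy uses to look up the ClusterLoadAssignment in the EDS file.
            "service_name": cluster_name,
            "eds_config": {"path": eds_path},
        },
    }
    return {"version_info": str(version), "resources": [cluster]}


if __name__ == "__main__":
    print(json.dumps(build_cds_response(1, "webcluster1", "/etc/envoy/eds.conf"), indent=2))
```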

Final directory layout:

root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# tree .
.
├── cds.conf
├── cds.conf.v2
├── docker-compose.yaml
├── Dockerfile-envoy
├── eds.conf
└── envoy.yaml

0 directories, 6 files

root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# cat docker-compose.yaml
version: '3.3'

services:
  envoy:
    build:
      context: .
      dockerfile: Dockerfile-envoy
    networks:
      envoymesh:
        aliases:
        - envoy
    expose:
    - "80"
    - "9901"

  webserver1:
    image: ikubernetes/mini-http-server:v0.3
    networks:
      envoymesh:
        aliases:
        - webserver1
        - myservice
    expose:
    - "8081"
    depends_on:
    - "envoy"

  webserver2:
    image: ikubernetes/mini-http-server:v0.3
    networks:
      envoymesh:
        aliases:
        - webserver2
        - myservice
    expose:
    - "8081"
    depends_on:
    - "envoy"

networks:
  envoymesh: {}

root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# cat Dockerfile-envoy
FROM envoyproxy/envoy-alpine:v1.11.1
COPY ./eds.conf ./envoy.yaml ./cds.conf* /etc/envoy/
RUN apk update && apk --no-cache add curl


root@ubuntu1:~/servicemesh_in_practise/cds-eds-filesystem# cat eds.conf
{
  "version_info": "2",
  "resources": [
    {
      "@type": "type.googleapis.com/envoy.api.v2.ClusterLoadAssignment",
      "cluster_name": "webcluster1",
      "endpoints": [
        {
          "lb_endpoints": [
            {
              "endpoint": {
                "address": {
                  "socket_address": {
                    "address": "172.24.0.3",
                    "port_value": 8081
                  }
                }
              }
            },
            {
              "endpoint": {
                "address": {
                  "socket_address": {
                    "address": "172.24.0.4",
                    "port_value": 8081
                  }
                }
              }
            }
          ]
        }
      ]
    }
  ]
}

Testing works the same way as in Example 1 and is not repeated here.
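One thing worth checking before swapping cds.conf.v2 in: for an EDS cluster, Envoy looks up the ClusterLoadAssignment named by eds_cluster_config.service_name, or by the cluster name if service_name is not set. That name must therefore match cluster_name in eds.conf. Below is a minimal Python sketch of this consistency check over the two parsed files. `check_eds_matches_cds` is a hypothetical helper, and it checks only the name match, not the full schema.

```python
def check_eds_matches_cds(cds_response, eds_response):
    """Return a list of problems; an empty list means every EDS cluster in the
    CDS response has a ClusterLoadAssignment with the expected name.

    Both arguments are parsed DiscoveryResponse dicts (e.g. from json.load).
    Non-EDS clusters (STATIC, STRICT_DNS, ...) are ignored.
    """
    cla_names = {r.get("cluster_name") for r in eds_response.get("resources", [])}
    problems = []
    for cluster in cds_response.get("resources", []):
        if cluster.get("type") != "EDS":
            continue
        eds_cfg = cluster.get("eds_cluster_config", {})
        wanted = eds_cfg.get("service_name") or cluster.get("name")
        if wanted not in cla_names:
            problems.append(
                f"cluster {cluster.get('name')!r}: no ClusterLoadAssignment named {wanted!r}"
            )
    return problems
```

For example, load cds.conf.v2 and eds.conf with `json.load` and swap the new CDS file in only when the returned list is empty.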