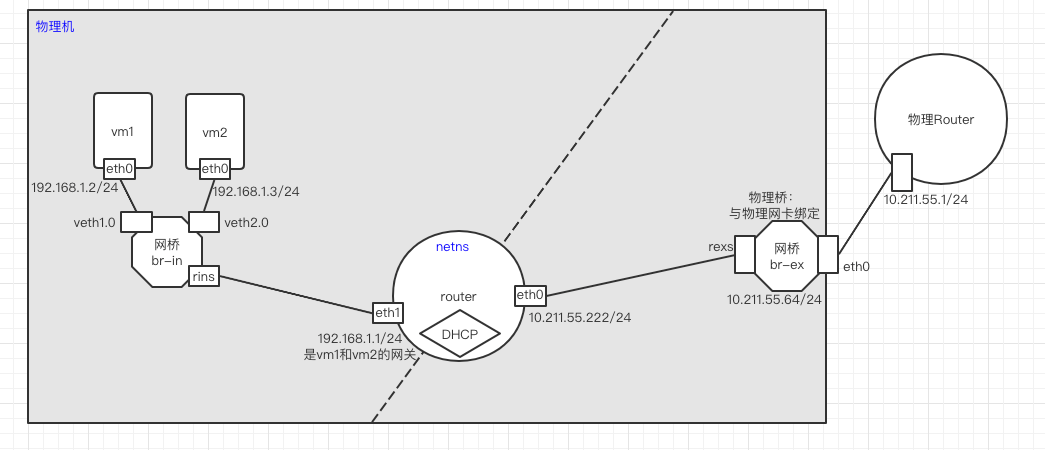

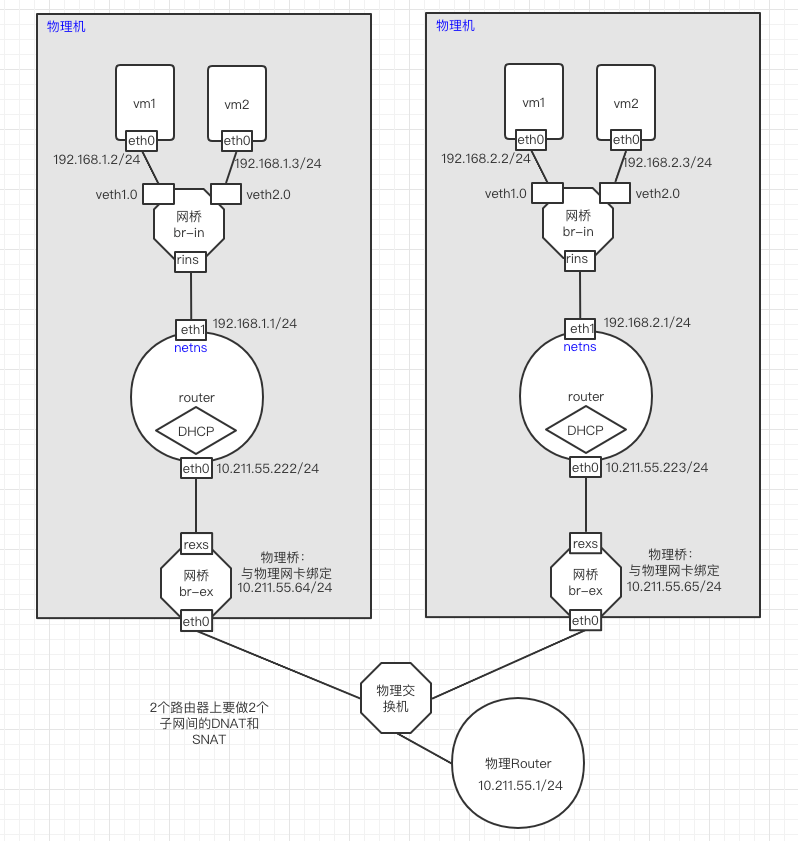

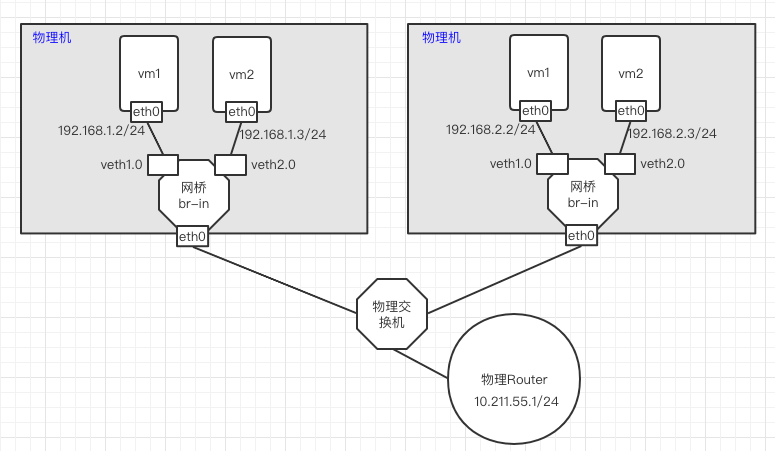

01 Connecting multiple VMs on a single host with Linux Bridge

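- br-ex: the external bridge. It is a Linux bridge bound to the physical NIC, and it gives the VMs on the host their path to the outside network.

- br-in: an internal bridge, also a Linux bridge. It is the layer-2 device that connects the VMs and lets them talk to each other.

- Router + DHCP: both run inside a network namespace (netns). Kernel forwarding is enabled in that netns and an SNAT rule is configured there. dnsmasq provides the DHCP service.

- vmX: a virtual machine, virtualized with qemu-kvm.

- veth: veth pairs connect devices to each other (here, the router namespace to the bridges).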

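1. VMs can reach each other.

2. VMs can reach the external network.

3. DHCP assigns IP addresses to the VMs automatically.

4. Main building blocks: Linux bridges (managed with brctl) and netns.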

# Create the bridge br-ex

[root@OS-network-1 ~]# brctl addbr br-ex

[root@OS-network-1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

inet 10.211.55.64/24 brd 10.211.55.255 scope global noprefixroute dynamic eth0

valid_lft 1568sec preferred_lft 1568sec

inet6 fdb2:2c26:f4e4:0:d3c1:2487:cb61:a77c/64 scope global noprefixroute dynamic

valid_lft 2591609sec preferred_lft 604409sec

inet6 fe80::91e:7192:7cdb:adf9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: br-ex: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether d2:21:f8:2d:6c:8f brd ff:ff:ff:ff:ff:ff

# Bring up br-ex

[root@OS-network-1 ~]# ip link set br-ex up

[root@OS-network-1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

inet 10.211.55.64/24 brd 10.211.55.255 scope global noprefixroute dynamic eth0

valid_lft 1547sec preferred_lft 1547sec

inet6 fdb2:2c26:f4e4:0:d3c1:2487:cb61:a77c/64 scope global noprefixroute dynamic

valid_lft 2591588sec preferred_lft 604388sec

inet6 fe80::91e:7192:7cdb:adf9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: br-ex: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether d2:21:f8:2d:6c:8f brd ff:ff:ff:ff:ff:ff

inet6 fe80::d021:f8ff:fe2d:6c8f/64 scope link

valid_lft forever preferred_lft forever

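# Remove the IP from the physical NIC, add it to br-ex, and attach eth0 to br-ex. These run on one line so that a remote session over eth0 is not cut off between steps.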

[root@OS-network-1 ~]# ip a del 10.211.55.64/24 dev eth0; ip a add 10.211.55.64/24 dev br-ex; brctl addif br-ex eth0

[root@OS-network-1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br-ex state UP group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

inet6 fdb2:2c26:f4e4:0:d3c1:2487:cb61:a77c/64 scope global noprefixroute dynamic

valid_lft 2591991sec preferred_lft 604791sec

inet6 fe80::91e:7192:7cdb:adf9/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: br-ex: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

inet 10.211.55.64/24 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::d021:f8ff:fe2d:6c8f/64 scope link

valid_lft forever preferred_lft forever

# Add a default route via br-ex

[root@OS-network-1 ~]# ip r add default via 10.211.55.1 dev br-ex
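The same bridge setup can be done with iproute2 alone (`ip link add ... type bridge`, `ip link set ... master`), which helps on hosts where bridge-utils/`brctl` is not installed. `migrate_ip_to_bridge` below is a hypothetical helper and is not part of the original setup. When `DRY_RUN=1` is set, it only prints the commands it would run.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: move the host IPv4 address from a NIC to a new bridge.
# usage: migrate_ip_to_bridge <nic> <bridge> <cidr> <gateway>
migrate_ip_to_bridge() {
  local nic=$1 br=$2 cidr=$3 gw=$4
  run ip link add name "$br" type bridge      # like: brctl addbr
  run ip link set dev "$br" up
  # Keep the next three steps back to back: in between, the host has no
  # IPv4 address on its uplink and remote sessions stall.
  run ip addr del "$cidr" dev "$nic"
  run ip addr add "$cidr" dev "$br"
  run ip link set dev "$nic" master "$br"     # like: brctl addif
  # The old default route went through the NIC; re-create it on the bridge.
  run ip route add default via "$gw" dev "$br"
}
```

For example, `DRY_RUN=1 migrate_ip_to_bridge eth0 br-ex 10.211.55.64/24 10.211.55.1` prints six commands that correspond to the steps above.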

# Create the internal bridge br-in

[root@OS-network-1 ~]# brctl addbr br-in

[root@OS-network-1 ~]# ip link set br-in up

[root@OS-network-1 ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br-ex state UP mode DEFAULT group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

3: br-ex: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

4: br-in: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether f2:7f:19:80:f2:01 brd ff:ff:ff:ff:ff:ff

[root@OS-network-1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-ex 8000.001c426cb4ed no eth0

br-in 8000.000000000000 no
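Without bridge-utils, you can read the "interfaces" column of `brctl show` from sysfs, where each bridge lists its ports under `/sys/class/net/<bridge>/brif/`. The iproute2 commands `ip link show master br-in` and `bridge link show` give similar information. `bridge_ports` is a hypothetical helper. Its optional second argument exists only so it can be tried against a fake directory.

```shell
# Hypothetical helper: list the ports of a Linux bridge from sysfs.
# usage: bridge_ports <bridge> [sysfs-net-root]
bridge_ports() {
  local br=$1 root=${2:-/sys/class/net}
  local dir="$root/$br/brif"
  if [ ! -d "$dir" ]; then
    echo "bridge_ports: $br is not a bridge" >&2
    return 1
  fi
  ls -1 "$dir"    # one entry per attached port
}
```

For example, `bridge_ports br-ex` should list eth0 once eth0 has been added to the bridge.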

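The router is implemented as a netns. `net.ipv4.ip_forward` is a per-namespace setting. With the kernel's default behaviour, however, a newly created netns copies the host's IPv4 forwarding value when it is created. That is why forwarding is enabled on the host below, before the router namespace is created. You can also enable it later from inside the namespace with `ip netns exec router sysctl -w net.ipv4.ip_forward=1`.

What is ip_forward (kernel forwarding)?

For security reasons, Linux does not forward packets by default.

Forwarding works like this: a host has more than one network interface, and one of them receives a packet addressed to some other host. The kernel then sends the packet out through another interface according to the routing table. This is exactly what a router does.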
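A quick way to see whether forwarding is on is to read `/proc/sys/net/ipv4/ip_forward` (1 means enabled). `forwarding_state` below is a hypothetical helper. The file path is a parameter only so the function can be tested.

```shell
# Hypothetical helper: report the IPv4 forwarding state.
# /proc/sys/net reflects the network namespace of the reading process.
forwarding_state() {
  local f=${1:-/proc/sys/net/ipv4/ip_forward}
  case "$(cat "$f" 2>/dev/null)" in
    1) echo enabled ;;
    0) echo disabled ;;
    *) echo unknown ;;    # missing or unreadable file
  esac
}
```

`forwarding_state` checks the host. `ip netns exec router cat /proc/sys/net/ipv4/ip_forward` shows the router namespace's own value.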

# Enable kernel forwarding (ip_forward)

[root@OS-network-1 ~]# vi /etc/sysctl.conf

[root@OS-network-1 ~]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

net.ipv4.ip_forward = 1

# Apply the settings

[root@OS-network-1 ~]# sysctl -p

net.ipv4.ip_forward = 1
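The header of /etc/sysctl.conf shown above recommends putting local settings in /etc/sysctl.d/. The sketch below is a hypothetical helper that writes the forwarding setting to a drop-in file there. The file name 99-ip-forward.conf is an arbitrary choice.

```shell
# Hypothetical helper: persist ip_forward in a sysctl.d drop-in and
# print the path of the file it wrote.
write_forward_dropin() {
  local dir=${1:-/etc/sysctl.d}
  local file="$dir/99-ip-forward.conf"
  printf 'net.ipv4.ip_forward = 1\n' > "$file" || return 1
  echo "$file"
}
```

Running `sysctl -p "$(write_forward_dropin)"` applies the setting immediately. `sysctl --system` reloads every configuration directory.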

# Create a netns named router

[root@OS-network-1 ~]# ip netns add router

[root@OS-network-1 ~]# ip netns show

router


# Create a veth pair

[root@OS-network-1 ~]# ip link add rexr type veth peer name rexs

# Attach one end of the veth pair to br-ex and bring it up

[root@OS-network-1 ~]# brctl addif br-ex rexs

[root@OS-network-1 ~]# ip link set rexs up

[root@OS-network-1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-ex 8000.001c426cb4ed no eth0

rexs

br-in 8000.000000000000 no

# Move the other end into the router namespace

[root@OS-network-1 ~]# ip link set rexr netns router

# Rename rexr to eth0 inside the router namespace (optional)

[root@OS-network-1 ~]# ip netns exec router ip link set rexr name eth0

# Bring the interface up and assign an IP

[root@OS-network-1 ~]# ip netns exec router ip link set eth0 up # an interface moved into a netns is down there, even if it was up before the move, so bring it up again

[root@OS-network-1 ~]# ip netns exec router ip a add 10.211.55.222/24 dev eth0

[root@OS-network-1 ~]# ip netns exec router ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c6:99:75:7a:d8:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.211.55.222/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fdb2:2c26:f4e4:0:c499:75ff:fe7a:d8bc/64 scope global mngtmpaddr dynamic

valid_lft 2591990sec preferred_lft 604790sec

inet6 fe80::c499:75ff:fe7a:d8bc/64 scope link

valid_lft forever preferred_lft forever

# Test: ping the physical gateway

[root@OS-network-1 ~]# ip netns exec router ping 10.211.55.1 -c1

PING 10.211.55.1 (10.211.55.1) 56(84) bytes of data.

64 bytes from 10.211.55.1: icmp_seq=1 ttl=128 time=0.163 ms

--- 10.211.55.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.163/0.163/0.163/0.000 ms
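Every link into a namespace in this setup follows the same pattern: create a veth pair, plug one end into a bridge, move the other end into the netns, rename it, bring it up, and assign an address. `plug_ns_into_bridge` is a hypothetical helper that captures that pattern with iproute2 commands. With `DRY_RUN=1` it only prints them.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: connect a netns to a bridge through a veth pair.
# usage: plug_ns_into_bridge <netns> <bridge> <host-end> <ns-end> <name-in-ns> <cidr>
plug_ns_into_bridge() {
  local ns=$1 br=$2 host_end=$3 ns_end=$4 name=$5 cidr=$6
  run ip link add "$ns_end" type veth peer name "$host_end"
  run ip link set dev "$host_end" master "$br"     # like: brctl addif
  run ip link set dev "$host_end" up
  run ip link set dev "$ns_end" netns "$ns"
  run ip netns exec "$ns" ip link set dev "$ns_end" name "$name"
  # The moved end is down inside the namespace, so bring it up there.
  run ip netns exec "$ns" ip link set dev "$name" up
  run ip netns exec "$ns" ip addr add "$cidr" dev "$name"
}
```

For example, `plug_ns_into_bridge router br-ex rexs rexr eth0 10.211.55.222/24` reproduces the steps above. `plug_ns_into_bridge router br-in rins rinr eth1 192.168.1.1/24` corresponds to the br-in link that follows.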

[root@OS-network-1 ~]# ip link add rinr type veth peer name rins

[root@OS-network-1 ~]# ip link set rins up

[root@OS-network-1 ~]# brctl addif br-in rins

[root@OS-network-1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-ex 8000.001c426cb4ed no eth0

rexs

br-in 8000.928eba1faa9e no rins

[root@OS-network-1 ~]# ip link set rinr netns router

[root@OS-network-1 ~]# ip netns exec router ip link

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether c6:99:75:7a:d8:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: rinr@if7: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 52:75:ef:6f:34:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@OS-network-1 ~]# ip netns exec router ip link set rinr name eth1 # rename (optional)

[root@OS-network-1 ~]# ip netns exec router ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c6:99:75:7a:d8:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.211.55.222/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fdb2:2c26:f4e4:0:c499:75ff:fe7a:d8bc/64 scope global mngtmpaddr dynamic

valid_lft 2591488sec preferred_lft 604288sec

inet6 fe80::c499:75ff:fe7a:d8bc/64 scope link

valid_lft forever preferred_lft forever

8: eth1@if7: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 52:75:ef:6f:34:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@OS-network-1 ~]# ip netns exec router ip link set eth1 up

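[root@OS-network-1 ~]# ip netns exec router ip a add 192.168.1.1/24 dev eth1

Note: the outputs that follow show eth1 as 192.168.1.1/4 and the connected route as 192.0.0.0/4. Later, vm2 receives 192.168.1.185/4 from DHCP. So in the recorded session the address was evidently added with a /4 prefix. The intended prefix is /24, which gives the connected route 192.168.1.0/24.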

[root@OS-network-1 ~]# ip netns exec router ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c6:99:75:7a:d8:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.211.55.222/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fdb2:2c26:f4e4:0:c499:75ff:fe7a:d8bc/64 scope global mngtmpaddr dynamic

valid_lft 2591945sec preferred_lft 604745sec

inet6 fe80::c499:75ff:fe7a:d8bc/64 scope link

valid_lft forever preferred_lft forever

8: eth1@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 52:75:ef:6f:34:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.1/4 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::5075:efff:fe6f:3488/64 scope link

valid_lft forever preferred_lft forever
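The address above carries a /4 prefix, not /24. The prefix length decides which addresses count as on-link. With /4 the network is 192.0.0.0/4 (the route that appears in the router's table later) and the broadcast address is 207.255.255.255 (the value vm2 reports later). The hypothetical POSIX-shell helpers below do this arithmetic, which makes such mistakes easy to spot.

```shell
# Hypothetical helpers: IPv4 prefix arithmetic in POSIX shell.
# Convert a dotted quad to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to a dotted quad.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Netmask for a prefix length between 1 and 32, as an integer.
prefix_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Network address of ADDR/LEN, e.g. 192.168.1.1/4 -> 192.0.0.0/4.
cidr_network() {
  local ip=${1%/*} len=${1#*/} mask
  mask=$(prefix_mask "$len")
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & mask )))/$len"
}

# Broadcast address of ADDR/LEN (all host bits set).
cidr_broadcast() {
  local ip=${1%/*} len=${1#*/} mask
  mask=$(prefix_mask "$len")
  int_to_ip $(( ($(ip_to_int "$ip") & mask) | (~mask & 0xFFFFFFFF) ))
}
```

`cidr_network 192.168.1.1/24` gives 192.168.1.0/24, the route the router should have on eth1.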

vm1 uses a manually configured IP address and is created with qemu-kvm.

Install qemu-kvm, then prepare the disk images and the NIC setup script:

[root@OS-network-1 ~]# yum install -y qemu-kvm

[root@OS-network-1 ~]# ln -sv /usr/libexec/qemu-kvm /usr/bin/

'/usr/bin/qemu-kvm' -> '/usr/libexec/qemu-kvm'

[root@OS-network-1 ~]# mkdir -pv /images/cirros

[root@OS-network-1 ~]# cd /images/cirros/

[root@OS-network-1 cirros]# rz -be


[root@OS-network-1 cirros]# # Received /Users/lijuzhang/Downloads/cirros-0.4.0-x86_64-disk.img

[root@OS-network-1 cirros]# ls

cirros-0.4.0-x86_64-disk.img

[root@OS-network-1 cirros]# cp cirros-0.4.0-x86_64-disk.img test1.qcow2

[root@OS-network-1 cirros]# cp cirros-0.4.0-x86_64-disk.img test2.qcow2
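Every VM needs its own writable disk. That includes test3.qcow2, which the vm3 command at the end of this section boots; its copy is not shown. `make_vm_disks` is a hypothetical helper that copies the base image once per VM and leaves existing copies alone.

```shell
# Hypothetical helper: create test1.qcow2 ... testN.qcow2 from a base image,
# skipping files that already exist.
# usage: make_vm_disks <base-image> <count> [dir]
make_vm_disks() {
  local base=$1 count=$2 dir i
  dir=${3:-$(dirname "$base")}
  i=1
  while [ "$i" -le "$count" ]; do
    if [ ! -e "$dir/test$i.qcow2" ]; then
      cp "$base" "$dir/test$i.qcow2" || return 1
    fi
    i=$((i + 1))
  done
}
```

For example, `make_vm_disks /images/cirros/cirros-0.4.0-x86_64-disk.img 3` creates the three disks used in this section.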

# Prepare the NIC script (QEMU runs it with the tap interface name as its first argument)

[root@OS-network-1 cirros]# vi /etc/qemu-ifup

[root@OS-network-1 cirros]# bash -n /etc/qemu-ifup

[root@OS-network-1 cirros]# cat /etc/qemu-ifup

#!/bin/bash

bridge=br-in

if [ -n "$1" ];then

ip link set "$1" up

brctl addif "$bridge" "$1" && exit 0 || exit 1

else

echo "Error: no interface specified" >&2

exit 2

fi

[root@OS-network-1 cirros]# chmod +x /etc/qemu-ifup
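The script above hard-codes br-in. The vm3 command at the end of this section points at /etc/qemu-ifup172, whose contents are not shown; presumably it is the same script with the second bridge. The sketch below is a hypothetical variant that reads the bridge from a `QEMU_BRIDGE` environment variable (default br-in) and uses iproute2 instead of brctl.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical variant of /etc/qemu-ifup: QEMU passes the tap name as $1,
# and the bridge comes from QEMU_BRIDGE instead of being hard-coded.
qemu_ifup() {
  local bridge=${QEMU_BRIDGE:-br-in}
  if [ -z "${1:-}" ]; then
    echo "Error: no interface specified" >&2
    return 2
  fi
  run ip link set dev "$1" up || return 1
  run ip link set dev "$1" master "$bridge"    # like: brctl addif
}
```

To install it, save the functions as an executable script whose last line is `qemu_ifup "$@"`. Then start vm3 with `QEMU_BRIDGE=br-in2 qemu-kvm ...`. This assumes the script inherits QEMU's environment, which is the usual behaviour for a child process. Keeping one script per bridge works just as well.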

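# Create the VM with qemu-kvm. The guest NIC is backed by a tap device (veth1.0) on the host, and /etc/qemu-ifup attaches that tap device to br-in

# --nographic: no graphical (VNC/SDL) display; the guest's serial console runs in the foreground of this terminal

# -daemonize: if a VNC display is used instead, -daemonize runs QEMU in the background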

[root@OS-network-1 cirros]# qemu-kvm -m 128 -smp 1 -name vm1 -drive file=/images/cirros/test1.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:cc -net tap,ifname=veth1.0,script=/etc/qemu-ifup --nographic

# On the host (in another terminal): the VM's tap device veth1.0 has joined br-in

[root@OS-network-1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-ex 8000.001c426cb4ed no eth0

rexs

br-in 8000.928eba1faa9e no rins

veth1.0

############ debug end ##############


http://cirros-cloud.net

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

cirros login: cirros

Password:

$ sudo -i

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:cc brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:feaa:bbcc/64 scope link

valid_lft forever preferred_lft forever

# ip a add 192.168.1.2/24 dev eth0

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:cc brd ff:ff:ff:ff:ff:ff

inet 192.168.1.2/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feaa:bbcc/64 scope link

valid_lft forever preferred_lft forever

# # Ping the gateway

# ping 192.168.1.1 -c1

PING 192.168.1.1 (192.168.1.1): 56 data bytes

64 bytes from 192.168.1.1: seq=0 ttl=64 time=1.793 ms

--- 192.168.1.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.793/1.793/1.793 ms

# # Add a default route (gateway)

# ip route add default via 192.168.1.1

# ip r

default via 192.168.1.1 dev eth0

192.168.1.0/24 dev eth0 src 192.168.1.2

# ping -c1 10.211.55.222 # reachable

PING 10.211.55.222 (10.211.55.222): 56 data bytes

64 bytes from 10.211.55.222: seq=0 ttl=64 time=0.597 ms

--- 10.211.55.222 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.597/0.597/0.597 ms

#

# ping -c1 10.211.55.1 # unreachable

PING 10.211.55.1 (10.211.55.1): 56 data bytes

^C

--- 10.211.55.1 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

# ping -c1 10.211.55.64 # unreachable

PING 10.211.55.64 (10.211.55.64): 56 data bytes

^C

--- 10.211.55.64 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

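vm1 cannot reach br-ex's address (10.211.55.64) or the physical gateway. The echo requests do leave through the router with source address 192.168.1.2. However, neither the physical gateway nor the host has a route back to 192.168.1.0/24 via the router (10.211.55.222), so the replies never arrive. One fix is to add that return route on the physical gateway. The more common approach is SNAT on the router: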

[root@OS-network-1 ~]# ip netns exec router iptables -t nat -A POSTROUTING -s 192.168.1.0/24 ! -d 192.168.1.0/24 -j SNAT --to-source 10.211.55.222

[root@OS-network-1 ~]# ip netns exec router iptables -vnL -t nat

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 SNAT all -- * * 192.168.1.0/24 !192.168.1.0/24 to:10.211.55.222
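Running the `-A` command twice appends a duplicate rule. `iptables -C` checks whether an identical rule already exists (it exits non-zero if not), so a hypothetical `ensure_snat` helper can add the rule only once. With `DRY_RUN=1` it prints the append command instead of running it. The existence check always runs.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: add the SNAT rule used above unless it already exists.
# usage: ensure_snat <netns> <subnet> <to-source>
ensure_snat() {
  local ns=$1 subnet=$2 to=$3
  if ! ip netns exec "$ns" iptables -t nat -C POSTROUTING \
       -s "$subnet" ! -d "$subnet" -j SNAT --to-source "$to" 2>/dev/null; then
    run ip netns exec "$ns" iptables -t nat -A POSTROUTING \
      -s "$subnet" ! -d "$subnet" -j SNAT --to-source "$to"
  fi
}
```

`ensure_snat router 192.168.1.0/24 10.211.55.222` reproduces the rule above. The 172.16.0.0/16 network behind the router's eth3 would presumably need the same rule before vm3 can reach the outside. If the router's external address were dynamic, `-o eth0 -j MASQUERADE` is the usual alternative to a fixed `--to-source`.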

Test: ping the physical gateway from vm1

# Reachable now

# ping -c1 10.211.55.1

PING 10.211.55.1 (10.211.55.1): 56 data bytes

64 bytes from 10.211.55.1: seq=0 ttl=127 time=1.641 ms

--- 10.211.55.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.641/1.641/1.641 ms

Test: ping the public address 114.114.114.114 from vm1

# ping 114.114.114.114 -c1

PING 114.114.114.114 (114.114.114.114): 56 data bytes

--- 114.114.114.114 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

# Unreachable

Packet capture analysis. The packets travel veth1.0 -> rins -> router/eth1 -> router/eth0 -> rexs -> eth0 -> 10.211.55.1 -> 114.114.114.114. Capture on each of these devices in turn:

...capture steps on the other devices omitted...

# Capture on eth1 in the router namespace: outgoing echo requests are visible, but no replies come back

[root@OS-network-1 ~]# ip netns exec router tcpdump -nn -v -i eth1 icmp

tcpdump: listening on eth1, link-type EN10MB (Ethernet), capture size 262144 bytes

12:34:17.757312 IP (tos 0x0, ttl 64, id 58602, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.1.2 > 114.114.114.114: ICMP echo request, id 49921, seq 3257, length 64

12:34:18.802413 IP (tos 0x0, ttl 64, id 58829, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.1.2 > 114.114.114.114: ICMP echo request, id 49921, seq 3258, length 64

12:34:19.848645 IP (tos 0x0, ttl 64, id 59055, offset 0, flags [DF], proto ICMP (1), length 84)

192.168.1.2 > 114.114.114.114: ICMP echo request, id 49921, seq 3259, length 64

^C

3 packets captured

# Capture on eth0 in the router namespace: no packets go out, so the problem lies in the router

[root@OS-network-1 ~]# ip netns exec router tcpdump -nn -v -i eth0 icmp

tcpdump: listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel
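You can script the hop-by-hop capture described above. `capture_path` is a hypothetical helper. Each argument is `netns:interface`, or just `interface` for the host namespace. Each capture stops after 3 ICMP packets or 5 seconds (`timeout` from coreutils), so vm1 should keep pinging while it runs.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: short ICMP capture on each hop, in order.
# usage: capture_path [netns:]iface ...
capture_path() {
  local hop ns ifc
  for hop in "$@"; do
    case $hop in
      *:*) ns=${hop%%:*} ifc=${hop#*:} ;;
      *)   ns= ifc=$hop ;;
    esac
    if [ -n "$ns" ]; then
      run ip netns exec "$ns" timeout 5 tcpdump -nn -c 3 -i "$ifc" icmp
    else
      run timeout 5 tcpdump -nn -c 3 -i "$ifc" icmp
    fi
  done
}
```

For the path above: `capture_path veth1.0 rins router:eth1 router:eth0 rexs eth0`. The first hop with no packets shows where they are lost.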

Configure a default gateway on the router:

# The routing table has neither a default route nor a route to 114.114.114.114

[root@OS-network-1 ~]# ip netns exec router ip r

10.211.55.0/24 dev eth0 proto kernel scope link src 10.211.55.222

192.0.0.0/4 dev eth1 proto kernel scope link src 192.168.1.1

# Add the default route

[root@OS-network-1 ~]# ip netns exec router ip r add default via 10.211.55.1 dev eth0

[root@OS-network-1 ~]# ip netns exec router ip r

default via 10.211.55.1 dev eth0

10.211.55.0/24 dev eth0 proto kernel scope link src 10.211.55.222

192.0.0.0/4 dev eth1 proto kernel scope link src 192.168.1.1

Test: ping the public address 114.114.114.114 from vm1

# Reachable now

# ping 114.114.114.114 -c1

PING 114.114.114.114 (114.114.114.114): 56 data bytes

64 bytes from 114.114.114.114: seq=0 ttl=127 time=1.946 ms

--- 114.114.114.114 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.946/1.946/1.946 ms

DHCP is provided by dnsmasq, running inside the router netns.

[root@OS-network-1 ~]# yum install -y dnsmasq

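# On the host, create the dnsmasq configuration file

[root@OS-network-1 ~]# cat > /etc/dnsmasq.d/netns.conf <<EOF

# Address dnsmasq listens on: the router's eth1 address

listen-address=192.168.1.1

# DHCP address range and lease time

dhcp-range=192.168.1.10,192.168.1.200,1h

# Gateway handed to clients (option 3 = router); run dnsmasq --help dhcp to list the options

dhcp-option=3,192.168.1.1

# DNS servers handed to clients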

dhcp-option=option:dns-server,114.114.114.114,8.8.4.4

EOF

# Start dnsmasq

[root@OS-network-1 ~]# ip netns exec router dnsmasq --conf-file=/etc/dnsmasq.d/netns.conf
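When no netmask is given, dnsmasq appears to take it from the interface that owns the range. That would explain why vm2 below receives a /4 address, since eth1 was configured with /4. The direct fix is to correct eth1's prefix. Stating the netmask in `dhcp-range` (as in dnsmasq's example configuration) also makes the intent explicit. `gen_dnsmasq_conf` is a hypothetical generator for such a file.

```shell
# Hypothetical generator: the settings of netns.conf above plus an explicit
# netmask in dhcp-range; option 3 is written under its symbolic name.
# usage: gen_dnsmasq_conf <listen-ip> <range-start> <range-end> <netmask> <gateway>
gen_dnsmasq_conf() {
  cat <<EOF
# Address dnsmasq listens on
listen-address=$1
# start,end,netmask,lease time
dhcp-range=$2,$3,$4,1h
dhcp-option=option:router,$5
dhcp-option=option:dns-server,114.114.114.114,8.8.4.4
EOF
}
```

A possible invocation: `gen_dnsmasq_conf 192.168.1.1 192.168.1.10 192.168.1.200 255.255.255.0 192.168.1.1 > /etc/dnsmasq.d/netns.conf`. When several dnsmasq instances run (router and dhcp2 later on), giving each one its own `--pid-file` and `--dhcp-leasefile` (for example /run/dnsmasq-router.pid and /var/lib/dnsmasq/router.leases, hypothetical paths) should keep them from sharing the default files.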

Test from vm1 by running the udhcpc client manually:

# udhcpc -R

udhcpc (v1.23.2) started

WARN: '/usr/share/udhcpc/default.script' should not be used in cirros. Replaced by cirros-dhcpc.

Sending discover...

Sending discover...

Sending select for 192.168.1.10...

Lease of 192.168.1.10 obtained, lease time 3600 # address 192.168.1.10 assigned

WARN: '/usr/share/udhcpc/default.script' should not be used in cirros. Replaced by cirros-dhcpc.

vm2 gets its IP address via DHCP.

[root@OS-network-1 cirros]# qemu-kvm -m 128 -smp 1 -name vm2 -drive file=/images/cirros/test2.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:dd -net tap,ifname=veth2.0,script=/etc/qemu-ifup --nographic

......

=== datasource: None None ===

=== cirros: current=0.4.0 uptime=136.33 ===


http://cirros-cloud.net

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

cirros login: cirros

Password:

$ sudo -i

# The IP address has been assigned

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:dd brd ff:ff:ff:ff:ff:ff

inet 192.168.1.185/4 brd 207.255.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feaa:bbdd/64 scope link

valid_lft forever preferred_lft forever

# The default gateway has been configured

# ip r

default via 192.168.1.1 dev eth0

192.0.0.0/4 dev eth0 src 192.168.1.185

# Reach the external network

# ping 114.114.114.114 -c1

PING 114.114.114.114 (114.114.114.114): 56 data bytes

64 bytes from 114.114.114.114: seq=0 ttl=127 time=2.190 ms

--- 114.114.114.114 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 2.190/2.190/2.190 ms

# Reach the physical gateway

# ping 10.211.55.1 -c1

PING 10.211.55.1 (10.211.55.1): 56 data bytes

64 bytes from 10.211.55.1: seq=0 ttl=127 time=0.709 ms

--- 10.211.55.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.709/0.709/0.709 ms

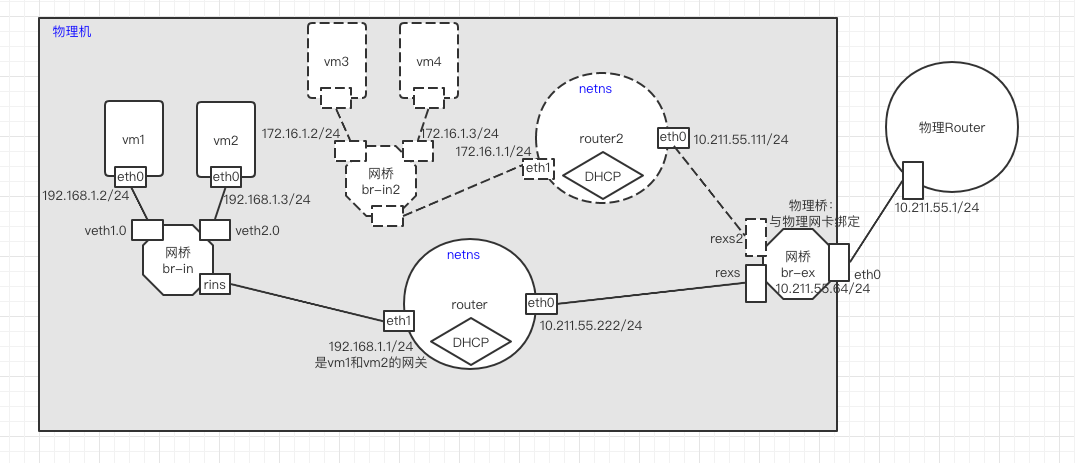

# Create the second internal bridge br-in2

[root@OS-network-1 ~]# brctl addbr br-in2

[root@OS-network-1 ~]# ip link set br-in2 up


[root@OS-network-1 ~]# ip link add rinr2 type veth peer name rins2

[root@OS-network-1 ~]# ip link set rins2 up

[root@OS-network-1 ~]# brctl addif br-in2 rins2

[root@OS-network-1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-ex 8000.001c426cb4ed no eth0

rexs

br-in 8000.928eba1faa9e no rins

veth1.0

br-in2 8000.f69052253160 no rins2

[root@OS-network-1 ~]# ip link set rinr2 netns router

[root@OS-network-1 ~]# ip netns exec router ip link

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether c6:99:75:7a:d8:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: eth1@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 52:75:ef:6f:34:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

16: rinr2@if15: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 0a:54:e5:dd:f8:69 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@OS-network-1 ~]# ip netns exec router ip link set rinr2 name eth3 # rename (optional)

[root@OS-network-1 ~]# ip netns exec router ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

6: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c6:99:75:7a:d8:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.211.55.222/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fdb2:2c26:f4e4:0:c499:75ff:fe7a:d8bc/64 scope global mngtmpaddr dynamic

valid_lft 2591807sec preferred_lft 604607sec

inet6 fe80::c499:75ff:fe7a:d8bc/64 scope link

valid_lft forever preferred_lft forever

8: eth1@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 52:75:ef:6f:34:88 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.1/4 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::5075:efff:fe6f:3488/64 scope link

valid_lft forever preferred_lft forever

16: eth3@if15: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 0a:54:e5:dd:f8:69 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@OS-network-1 ~]# ip netns exec router ip link set eth3 up

[root@OS-network-1 ~]# ip netns exec router ip a add 172.16.1.1/16 dev eth3


# Create the dhcp2 namespace and connect it to br-in2 with a veth pair

ip netns add dhcp2

ip link add rin2d type veth peer name rin2s

ip link set rin2s up

brctl addif br-in2 rin2s

ip link set rin2d netns dhcp2

ip netns exec dhcp2 ip link set rin2d name eth0

ip netns exec dhcp2 ip link set eth0 up

ip netns exec dhcp2 ip a add 172.16.1.2/16 dev eth0

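# On the host, create the dnsmasq configuration file for dhcp2

[root@OS-network-1 ~]# cat > /etc/dnsmasq.d/dhcp2.conf <<EOF

# Address dnsmasq listens on: eth0 in the dhcp2 namespace

listen-address=172.16.1.2

# DHCP address range and lease time

dhcp-range=172.16.1.10,172.16.20.200,1h

# Gateway handed to clients (option 3 = router, i.e. eth3 in the router namespace); run dnsmasq --help dhcp to list the options

dhcp-option=3,172.16.1.1

# DNS servers handed to clients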

dhcp-option=option:dns-server,114.114.114.114,8.8.4.4

EOF

# Start dnsmasq

[root@OS-network-1 ~]# ip netns exec dhcp2 dnsmasq --conf-file=/etc/dnsmasq.d/dhcp2.conf

qemu-kvm -m 128 -smp 1 -name vm3 -drive file=/images/cirros/test3.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:00 -net tap,ifname=veth3.0,script=/etc/qemu-ifup172 --nographic