02 OVS Bridges and VLANs

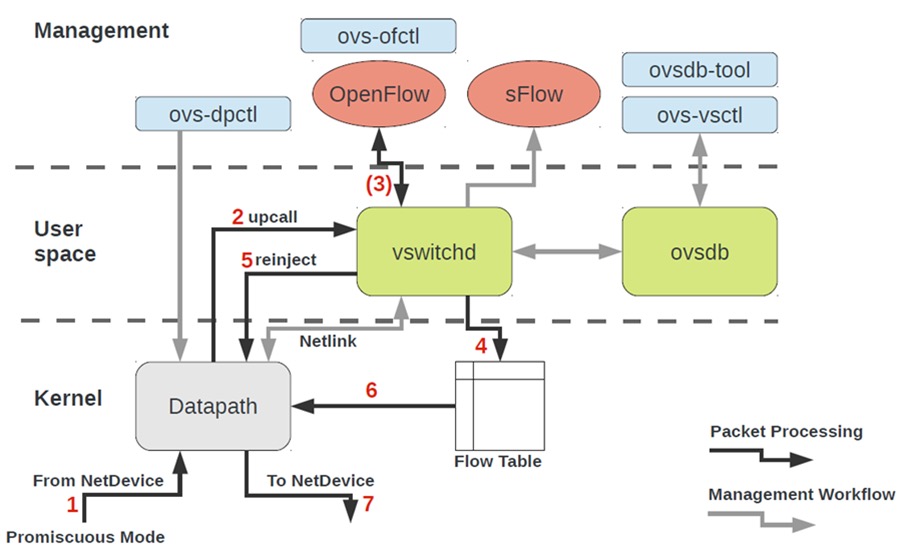

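ovs-vswitchd: the OVS daemon and the core component of OVS. It implements the switching function and, together with the Linux kernel module, provides flow-based switching. It talks to an upper-layer controller using the OpenFlow protocol, to ovsdb-server using the OVSDB protocol, and to the kernel module over netlink. It supports multiple independent datapaths (bridges), and it implements features such as bonding and VLANs by modifying the flow table.

ovsdb-server: a lightweight database server that stores the entire OVS configuration, including interfaces, switching settings, VLANs, and so on. ovs-vswitchd works according to the configuration in this database. ovsdb-server exchanges information with managers and with ovs-vswitchd using OVSDB (JSON-RPC).

ovs-dpctl: a tool for configuring the switch's kernel module (the datapath); it can control the forwarding rules.

ovs-vsctl: used to query or change the configuration of ovs-vswitchd; when it makes changes, it updates the database in ovsdb-server.

ovs-appctl: sends commands to running OVS daemons; it is rarely needed in everyday use.

ovsdbmonitor: a GUI tool that displays the data stored in ovsdb-server.

ovs-controller: a simple OpenFlow controller.

ovs-ofctl: controls the flow table contents when OVS works as an OpenFlow switch.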
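The tools above each look at a different layer. As a hedged sketch, the helper below groups one read-only inspection command per component; `ovs_inspect`, `run` and the `DRY_RUN` switch are hypothetical names introduced here, and `br-in` is simply the bridge created later in this walkthrough.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# One read-only inspection command per component (a sketch, not exhaustive).
ovs_inspect() {
  local br=${1:-br-in}
  run ovsdb-client list-dbs        # databases served by ovsdb-server
  run ovs-vsctl show               # configuration stored in ovsdb
  run ovs-ofctl dump-flows "$br"   # OpenFlow table of one bridge
  run ovs-dpctl dump-flows         # flows cached in the kernel datapath
  run ovs-appctl fdb/show "$br"    # MAC learning table inside ovs-vswitchd
}
```

On a host running OVS, `ovs_inspect br-in` runs the commands; `DRY_RUN=1 ovs_inspect br-in` only prints them.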

References:

https://blog.csdn.net/tantexian/article/details/46707175 (https://max.book118.com/html/2018/0502/164325096.shtm)

cat > /etc/yum.repos.d/openstack-rocky.repo <<"EOF"

[openstack]

name=openstack

baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-rocky/

gpgcheck=0

[Virt]

name=CentOS-$releasever - Base

baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

EOF
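yum substitutes `$releasever` itself, so the literal string has to reach the .repo file. With an unquoted delimiter (`<<EOF`) the shell expands the variable first, and because it is normally unset in a root shell, the line is written as `CentOS- - ...`; quoting the delimiter (`<<"EOF"`) keeps the text literal. The sketch below shows the difference in a temporary file; `heredoc_demo` is a hypothetical name.

```bash
# Hypothetical demo: compare what an unquoted and a quoted heredoc write to disk.
heredoc_demo() {
  local out
  out=$(mktemp)
  unset releasever   # as in a normal root shell, the variable is not set
  cat > "$out" <<EOF
name=CentOS-$releasever - unquoted
EOF
  cat >> "$out" <<"EOF"
name=CentOS-$releasever - quoted
EOF
  cat "$out"
  rm -f "$out"
}
```

Running `heredoc_demo` prints `name=CentOS- - unquoted` followed by `name=CentOS-$releasever - quoted`.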

[root@OS-network-1 ~]# yum install -y openvswitch

[root@OS-network-1 ~]# ovs-vsctl -V

ovs-vsctl (Open vSwitch) 2.11.0

DB Schema 7.16.1

[root@OS-network-1 ~]# systemctl start openvswitch

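Difference between port and interface:

By default, one port corresponds to one interface. A bond is treated as a single port made up of multiple interfaces. Put another way, a port is a logical switch port on the bridge, while an interface is the network device attached to it (a physical NIC, a tap device, one end of a veth pair, and so on).

ovs-vsctl show # show the configuration stored in ovsdb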
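To see the port/interface split concretely, a bonded port is one Port row that owns several Interface rows. The sketch below prints (or runs) the commands; `br-bond`, `bond0`, `eth1` and `eth2` are placeholder names, not devices from this lab, and `run`/`DRY_RUN` are hypothetical helpers.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create one bonded port from two interfaces, then list both views.
bond_demo() {
  local br=br-bond port=bond0
  run ovs-vsctl add-br "$br"
  run ovs-vsctl add-bond "$br" "$port" eth1 eth2   # 1 port, 2 interfaces
  run ovs-vsctl list-ports "$br"                   # would list: bond0
  run ovs-vsctl list-ifaces "$br"                  # would list: eth1, eth2
}
```

Neither `list-ports` nor `list-ifaces` includes the bridge's own local port, so the two listings differ only because of the bond.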

Bridge commands:

add-br BRIDGE create a new bridge named BRIDGE

add-br BRIDGE PARENT VLAN create new fake BRIDGE in PARENT on VLAN

del-br BRIDGE delete BRIDGE and all of its ports

list-br print the names of all the bridges

Port commands (a bond is considered to be a single port):

list-ports BRIDGE print the names of all the ports on BRIDGE

add-port BRIDGE PORT add network device PORT to BRIDGE

add-bond BRIDGE PORT IFACE... add bonded port PORT in BRIDGE from IFACES

del-port [BRIDGE] PORT delete PORT (which may be bonded) from BRIDGE

Interface commands (a bond consists of multiple interfaces):

list-ifaces BRIDGE print the names of all interfaces on BRIDGE

iface-to-br IFACE print name of bridge that contains IFACE

Database commands:

list TBL [REC] list RECord (or all records) in TBL; the available tables and their columns are described in the ovs-vswitchd.conf.db(5) man page

find TBL CONDITION... list records satisfying CONDITION in TBL

get TBL REC COL[:KEY] print values of COLumns in RECord in TBL

set TBL REC COL[:KEY]=VALUE set COLumn values in RECord in TBL

add TBL REC COL [KEY=]VALUE add (KEY=)VALUE to COLumn in RECord in TBL

remove TBL REC COL [KEY=]VALUE remove (KEY=)VALUE from COLumn

clear TBL REC COL clear values from COLumn in RECord in TBL

create TBL COL[:KEY]=VALUE create and initialize new record

destroy TBL REC delete RECord from TBL

wait-until TBL REC [COL[:KEY]=VALUE] wait until condition is true
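The generic database commands are what the VLAN experiments below rely on. As a sketch, here is how they apply to the Port table; `port_db_demo`, `run` and `DRY_RUN` are hypothetical names, and `veth1.0` is the first VM's port from this lab.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Query and edit the Port table with the generic database commands.
port_db_demo() {
  local port=${1:-veth1.0}
  run ovs-vsctl --columns=name,tag list port   # only the name and tag columns
  run ovs-vsctl find port tag=10               # every port whose tag is 10
  run ovs-vsctl get port "$port" tag           # the tag of one port
  run ovs-vsctl set port "$port" tag=10        # put the port into VLAN 10
  run ovs-vsctl clear port "$port" tag         # remove the tag again
}
```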

[root@OS-network-1 etc]# cat >/etc/qemu-ovs-ifup<<"EOF"

#!/bin/bash

bridge=br-in

if [ -n "$1" ];then

ip link set $1 up

sleep 1

ovs-vsctl add-port $bridge $1 && exit 0 || exit 1

else

echo "Error: no port specified"

exit 2

fi

EOF

[root@OS-network-1 etc]# chmod +x /etc/qemu-ovs-ifup

[root@OS-network-1 etc]# cat /etc/qemu-ovs-ifdown

#!/bin/bash

bridge=br-in

if [ -n "$1" ];then

ip link set $1 down

sleep 1

ovs-vsctl del-port $bridge $1 && exit 0 || exit 1

else

echo "Error: no port specified"

exit 2

fi

[root@OS-network-1 etc]# chmod +x /etc/qemu-ovs-ifdown

[root@OS-network-1 ~]# ovs-vsctl add-br br-in

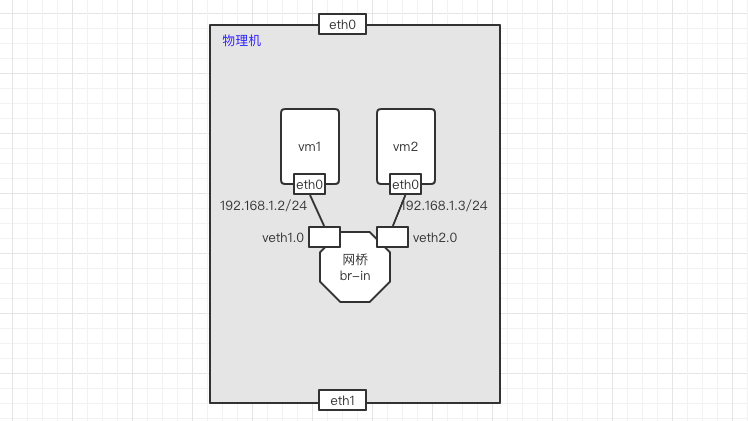

# Create the first VM: vm1

[root@OS-network-1 ~]# qemu-kvm -m 128 -smp 1 -name vm1 -drive file=/images/cirros/test1.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:01 -net tap,ifname=veth1.0,script=/etc/qemu-ovs-ifup,downscript=/etc/qemu-ovs-ifdown -nographic

# The host now has a veth1.0 interface

[root@OS-network-1 ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:1c:42:6c:b4:ed brd ff:ff:ff:ff:ff:ff

3: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 9a:50:f1:8a:74:6e brd ff:ff:ff:ff:ff:ff

4: br-in: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 76:f7:89:6b:50:46 brd ff:ff:ff:ff:ff:ff

7: veth1.0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master ovs-system state UNKNOWN mode DEFAULT group default qlen 1000

link/ether fa:c5:d3:86:83:6f brd ff:ff:ff:ff:ff:ff

# veth1.0 has been added to bridge br-in

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge br-in

Port "veth1.0"

Interface "veth1.0"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

# Create the second VM: vm2

[root@OS-network-1 ~]# qemu-kvm -m 128 -smp 1 -name vm2 -drive file=/images/cirros/test2.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:02 -net tap,ifname=veth2.0,script=/etc/qemu-ovs-ifup,downscript=/etc/qemu-ovs-ifdown -nographic

# Both veth1.0 and veth2.0 are now attached to bridge br-in; once the two VMs have IP addresses, they can ping each other

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge br-in

Port "veth1.0"

Interface "veth1.0"

Port "veth2.0"

Interface "veth2.0"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

# Configure an IP address on vm1

# ip a add 192.168.1.2/24 dev eth0

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.2/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feaa:bb01/64 scope link

valid_lft forever preferred_lft forever

# Configure an IP address on vm2

# ip a add 192.168.1.3/24 dev eth0

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:02 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.3/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feaa:bb02/64 scope link

valid_lft forever preferred_lft forever

# Test: ping vm2 from vm1

# ping 192.168.1.3 -c1

PING 192.168.1.3 (192.168.1.3): 56 data bytes

64 bytes from 192.168.1.3: seq=0 ttl=64 time=1.199 ms

--- 192.168.1.3 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.199/1.199/1.199 ms

# Test: ping vm1 from vm2

# ping 192.168.1.2 -c1

PING 192.168.1.2 (192.168.1.2): 56 data bytes

64 bytes from 192.168.1.2: seq=0 ttl=64 time=1.631 ms

--- 192.168.1.2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 1.631/1.631/1.631 ms

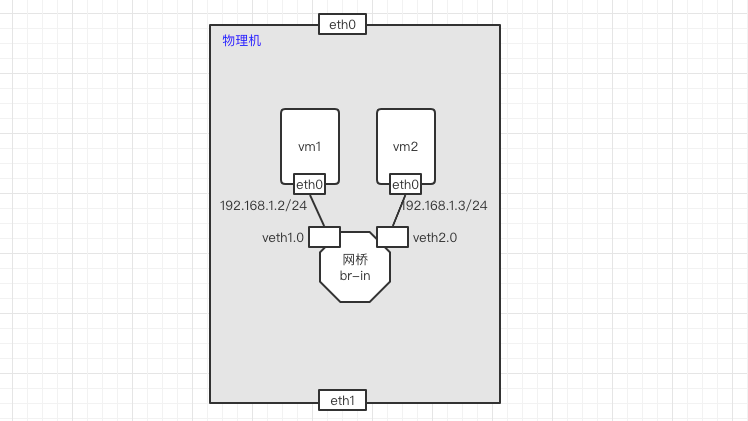

Building on Experiment 1:

[root@OS-network-1 ~]# ovs-vsctl list port

_uuid : 12ba7374-1323-4ecb-88a4-1974b7324818

bond_active_slave : []

bond_downdelay : 0

bond_fake_iface : false

bond_mode : []

bond_updelay : 0

cvlans : []

external_ids : {}

fake_bridge : false

interfaces : [95c0374d-e9c7-4013-b494-758c06814f5d]

lacp : []

mac : []

name : "veth1.0"

other_config : {}

protected : false

qos : []

rstp_statistics : {}

rstp_status : {}

statistics : {}

status : {}

tag : []

trunks : []

vlan_mode : []

_uuid : c3ca1758-444b-440e-b697-44770162d02d

bond_active_slave : []

bond_downdelay : 0

bond_fake_iface : false

bond_mode : []

bond_updelay : 0

cvlans : []

external_ids : {}

fake_bridge : false

interfaces : [0d89e25c-e783-48db-8fec-c8b5dc108466]

lacp : []

mac : []

name : br-in

other_config : {}

protected : false

qos : []

rstp_statistics : {}

rstp_status : {}

statistics : {}

status : {}

tag : [] # tag is empty here

trunks : []

vlan_mode : []

_uuid : 3a281519-8fb7-4553-aa0b-701e46a29a91

bond_active_slave : []

bond_downdelay : 0

bond_fake_iface : false

bond_mode : []

bond_updelay : 0

cvlans : []

external_ids : {}

fake_bridge : false

interfaces : [ce9624ef-906c-4c07-b708-9cda21f0698d]

lacp : []

mac : []

name : "veth2.0"

other_config : {}

protected : false

qos : []

rstp_statistics : {}

rstp_status : {}

statistics : {}

status : {}

tag : [] # tag is empty here

trunks : []

vlan_mode : []
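The full `list port` dump is long; when only the VLAN assignment matters, the name/tag pairs can be pulled out of it. The awk filter below is a hypothetical helper written against the `key : value` layout shown above (it assumes each record lists `name` before `tag`, as here); `ovs-vsctl --columns=name,tag list port` is the built-in way to narrow the output.

```bash
# Hypothetical filter: read "ovs-vsctl list port" output on stdin and print
# "<name> <tag>" for every record. Splits each line on " : " (with any spaces).
port_tags() {
  awk -F' *: *' '
    $1 == "name" { gsub(/"/, "", $2); name = $2 }   # strip the quotes around names
    $1 == "tag"  { print name, $2 }                 # one line per record
  '
}
```

`ovs-vsctl list port | port_tags` would print one line per port, for example `veth1.0 []` for the first record above.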

# The tags of veth1.0 and veth2.0 are both empty. First tag port veth1.0 with VLAN 10 and check whether the ping still works

[root@OS-network-1 ~]# ovs-vsctl set port veth1.0 tag=10

[root@OS-network-1 ~]# ovs-vsctl list port veth1.0

_uuid : 12ba7374-1323-4ecb-88a4-1974b7324818

bond_active_slave : []

bond_downdelay : 0

bond_fake_iface : false

bond_mode : []

bond_updelay : 0

cvlans : []

external_ids : {}

fake_bridge : false

interfaces : [95c0374d-e9c7-4013-b494-758c06814f5d]

lacp : []

mac : []

name : "veth1.0"

other_config : {}

protected : false

qos : []

rstp_statistics : {}

rstp_status : {}

statistics : {}

status : {}

tag : 10 # the port's VLAN ID is now 10

trunks : []

vlan_mode : []

# vm1 can no longer ping vm2

# ping 192.168.1.3 -c1

PING 192.168.1.3 (192.168.1.3): 56 data bytes

--- 192.168.1.3 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

# Now tag port veth2.0 with VLAN 10 as well and check whether the ping works

[root@OS-network-1 ~]# ovs-vsctl set port veth2.0 tag=10

[root@OS-network-1 ~]# ovs-vsctl list port veth2.0

_uuid : 3a281519-8fb7-4553-aa0b-701e46a29a91

bond_active_slave : []

bond_downdelay : 0

bond_fake_iface : false

bond_mode : []

bond_updelay : 0

cvlans : []

external_ids : {}

fake_bridge : false

interfaces : [ce9624ef-906c-4c07-b708-9cda21f0698d]

lacp : []

mac : []

name : "veth2.0"

other_config : {}

protected : false

qos : []

rstp_statistics : {}

rstp_status : {}

statistics : {}

status : {}

tag : 10

trunks : []

vlan_mode : []

# Now the ping succeeds

# ping 192.168.1.3 -c1

PING 192.168.1.3 (192.168.1.3): 56 data bytes

64 bytes from 192.168.1.3: seq=0 ttl=64 time=4.420 ms

--- 192.168.1.3 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 4.420/4.420/4.420 ms
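The results so far follow a simple rule for this lab. As a simplified model, assuming the cirros guests only send and accept untagged frames, and that each host-side port either has a tag (access port) or has neither tag nor trunks (which OVS treats as a trunk for all VLANs), two VMs can reach each other only when their ports carry the same tag value, with "no tag" counting as one value. `predict_ping` is a hypothetical name.

```bash
# Simplified model (assumption): guests use untagged frames only, and a port
# with no tag behaves as a trunk for all VLANs. Tags are given as ovs-vsctl
# shows them: a VLAN number, or "[]" / empty for no tag.
predict_ping() {
  local a=$1 b=$2
  case $a in '[]') a= ;; esac
  case $b in '[]') b= ;; esac
  if [ "$a" = "$b" ]; then
    # Same access VLAN, or both untagged (frames stay in the untagged VLAN 0).
    echo reachable
  else
    # Different access VLANs, or one access port and one untagged port: the
    # untagged side receives 802.1Q-tagged frames it ignores, and its
    # untagged replies never enter the access port's VLAN.
    echo unreachable
  fi
}
```

For example, `predict_ping 10 '[]'` gives `unreachable`, matching the failed ping after only veth1.0 was tagged.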

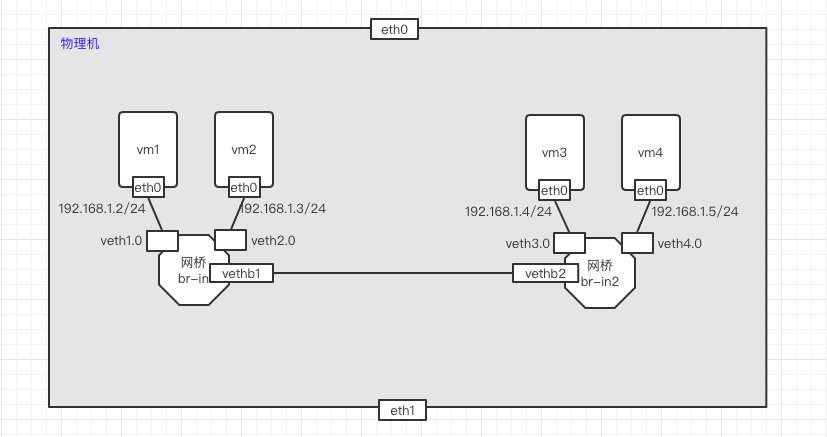

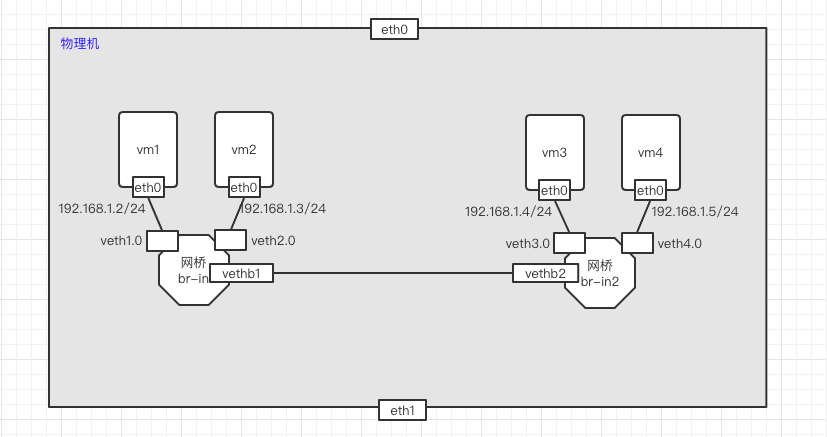

Goal: put all four VMs in the figure above in VLAN 10.

Create the OVS bridge br-in2, and connect br-in and br-in2 with a veth pair:

# Create bridge br-in2

[root@OS-network-1 ~]# ovs-vsctl add-br br-in2

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge "br-in2"

Port "br-in2"

Interface "br-in2"

type: internal

Bridge br-in

Port "veth1.0"

tag: 10

Interface "veth1.0"

Port "veth2.0"

tag: 10

Interface "veth2.0"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

# Connect bridges br-in and br-in2 with a veth pair

[root@OS-network-1 ~]# ip link add vethb1 type veth peer name vethb2

[root@OS-network-1 ~]# ip link set vethb1 up

[root@OS-network-1 ~]# ip link set vethb2 up

[root@OS-network-1 ~]# ovs-vsctl add-port br-in vethb1

[root@OS-network-1 ~]# ovs-vsctl add-port br-in2 vethb2

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge "br-in2"

Port "br-in2"

Interface "br-in2"

type: internal

Port "vethb2"

Interface "vethb2"

Bridge br-in

Port "veth1.0"

tag: 10

Interface "veth1.0"

Port "veth2.0"

tag: 10

Interface "veth2.0"

Port "vethb1"

Interface "vethb1"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"
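The walkthrough connects the bridges with a kernel veth pair. A different technique, not used here, is a pair of OVS patch ports: they exist only inside OVS, so no veth devices are needed. The sketch below prints or runs a single ovs-vsctl transaction; the `patch-<bridge>` port names, `patch_bridges`, `run` and `DRY_RUN` are hypothetical.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Link bridges $1 and $2 with two patch ports that name each other as peer.
patch_bridges() {
  local a=$1 b=$2
  run ovs-vsctl \
    add-port "$a" "patch-$b" -- set interface "patch-$b" type=patch options:peer="patch-$a" -- \
    add-port "$b" "patch-$a" -- set interface "patch-$a" type=patch options:peer="patch-$b"
}
```

`patch_bridges br-in br-in2` would create `patch-br-in2` on br-in and `patch-br-in` on br-in2. Like the veth ends, neither patch port has a tag, so both act as trunks.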

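Note:

When a veth pair connects two OVS bridges, neither end has a tag by default. With both tag and vlan_mode empty, OVS treats such a port as a trunk port, and because trunks is also empty, it carries all VLANs.

Create VMs vm3 and vm4: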
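If the link between the bridges should carry only some VLANs, the trunks column can list them explicitly. A minimal sketch, assuming the comma-separated set syntax for `trunks=`; `restrict_trunk`, `run` and `DRY_RUN` are hypothetical names.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Make PORT an explicit trunk limited to the VLAN IDs given as extra arguments.
restrict_trunk() {
  local port=$1
  shift
  local vlans
  vlans=$(IFS=,; printf '%s' "$*")   # join the VLAN IDs with commas
  run ovs-vsctl set port "$port" vlan_mode=trunk "trunks=$vlans"
}
```

For the final VLAN 20/30 layout, `restrict_trunk vethb1 20 30` and `restrict_trunk vethb2 20 30` would limit both ends; VLAN 10 traffic would then no longer cross between the bridges.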

[root@OS-network-1 ~]# cat /etc/qemu-ovs-ifup2

#!/bin/bash

bridge=br-in2

if [ -n "$1" ];then

ip link set $1 up

sleep 1

ovs-vsctl add-port $bridge $1 && exit 0 || exit 1

else

echo "Error: no port specified"

exit 2

fi

[root@OS-network-1 ~]# cat /etc/qemu-ovs-ifdown2

#!/bin/bash

bridge=br-in2

if [ -n "$1" ];then

ip link set $1 down

sleep 1

ovs-vsctl del-port $bridge $1 && exit 0 || exit 1

else

echo "Error: no port specified"

exit 2

fi
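/etc/qemu-ovs-ifup2 and /etc/qemu-ovs-ifdown2 differ from the first pair of scripts only in the bridge name. As a hedged sketch, one function can serve both bridges and both directions; `ovs_tap_hook`, `OVS_BRIDGE`, `run` and `DRY_RUN` are hypothetical names. The `--may-exist` and `--if-exists` options make adding or deleting the port a no-op when it is already in the desired state, and the `sleep 1` from the original scripts is left out.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# One hook for both bridges: the bridge comes from OVS_BRIDGE (an assumed
# variable, default br-in) instead of being hard-coded in each file.
ovs_tap_hook() {
  local action=$1 ifname=$2 bridge=${OVS_BRIDGE:-br-in}
  if [ -z "$ifname" ]; then
    echo "Error: no port specified" >&2
    return 2
  fi
  case $action in
    up)
      run ip link set "$ifname" up &&
        run ovs-vsctl --may-exist add-port "$bridge" "$ifname"   # no error if already added
      ;;
    down)
      run ip link set "$ifname" down
      run ovs-vsctl --if-exists del-port "$bridge" "$ifname"     # no error if already gone
      ;;
    *)
      echo "usage: ovs_tap_hook up|down IFNAME" >&2
      return 2
      ;;
  esac
}
```

A per-bridge hook file such as /etc/qemu-ovs-ifup2 would then only need to load these functions and call `OVS_BRIDGE=br-in2 ovs_tap_hook up "$1"`; how the functions are loaded (for example, by sourcing a shared file) is left open here.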

[root@OS-network-1 ~]# qemu-kvm -m 128 -smp 1 -name vm3 -drive file=/images/cirros/test3.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:03 -net tap,ifname=veth3.0,script=/etc/qemu-ovs-ifup2,downscript=/etc/qemu-ovs-ifdown2 -nographic

[root@OS-network-1 ~]# qemu-kvm -m 128 -smp 1 -name vm4 -drive file=/images/cirros/test4.qcow2,if=virtio,media=disk -net nic,macaddr=52:54:00:aa:bb:04 -net tap,ifname=veth4.0,script=/etc/qemu-ovs-ifup2,downscript=/etc/qemu-ovs-ifdown2 -nographic

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge "br-in2"

Port "br-in2"

Interface "br-in2"

type: internal

Port "vethb2"

Interface "vethb2"

Port "veth4.0"

Interface "veth4.0"

Port "veth3.0"

Interface "veth3.0"

Bridge br-in

Port "veth1.0"

tag: 10

Interface "veth1.0"

Port "veth2.0"

tag: 10

Interface "veth2.0"

Port "vethb1"

Interface "vethb1"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

# Configure an IP address on vm3

# ip a add 192.168.1.4/24 dev eth0

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:03 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.4/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feaa:bb03/64 scope link

valid_lft forever preferred_lft forever

# Configure an IP address on vm4

# ip a add 192.168.1.5/24 dev eth0

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:aa:bb:04 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.5/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:feaa:bb04/64 scope link

valid_lft forever preferred_lft forever

# Test from vm3: ping vm1 and vm4

# ping 192.168.1.2 -c1

PING 192.168.1.2 (192.168.1.2): 56 data bytes

--- 192.168.1.2 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss # fails

# ping 192.168.1.5 -c1

PING 192.168.1.5 (192.168.1.5): 56 data bytes

64 bytes from 192.168.1.5: seq=0 ttl=64 time=1.465 ms

--- 192.168.1.5 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss # succeeds

round-trip min/avg/max = 1.465/1.465/1.465 ms

Set the VLAN of vm3 to 10

[root@OS-network-1 ~]# ovs-vsctl set port veth3.0 tag=10

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge "br-in2"

Port "br-in2"

Interface "br-in2"

type: internal

Port "vethb2"

Interface "vethb2"

Port "veth4.0"

Interface "veth4.0"

Port "veth3.0"

tag: 10 # tag is 10

Interface "veth3.0"

Bridge br-in

Port "veth1.0"

tag: 10

Interface "veth1.0"

Port "veth2.0"

tag: 10

Interface "veth2.0"

Port "vethb1"

Interface "vethb1"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

# Test from vm3: ping vm1 and vm4

# ping 192.168.1.2 -c1

PING 192.168.1.2 (192.168.1.2): 56 data bytes

64 bytes from 192.168.1.2: seq=0 ttl=64 time=8.894 ms

--- 192.168.1.2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss # succeeds

round-trip min/avg/max = 8.894/8.894/8.894 ms

# ping 192.168.1.5 -c1

PING 192.168.1.5 (192.168.1.5): 56 data bytes

--- 192.168.1.5 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss # fails

Set the VLAN of vm4 to 10

[root@OS-network-1 ~]# ovs-vsctl set port veth4.0 tag=10

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge "br-in2"

Port "br-in2"

Interface "br-in2"

type: internal

Port "vethb2"

Interface "vethb2"

Port "veth4.0"

tag: 10

Interface "veth4.0"

Port "veth3.0"

tag: 10

Interface "veth3.0"

Bridge br-in

Port "veth1.0"

tag: 10

Interface "veth1.0"

Port "veth2.0"

tag: 10

Interface "veth2.0"

Port "vethb1"

Interface "vethb1"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

# Test from vm3 again: ping vm1 and vm4

# ping 192.168.1.2 -c1

PING 192.168.1.2 (192.168.1.2): 56 data bytes

64 bytes from 192.168.1.2: seq=0 ttl=64 time=8.894 ms

--- 192.168.1.2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss # succeeds

round-trip min/avg/max = 8.894/8.894/8.894 ms

# ping 192.168.1.5 -c1

PING 192.168.1.5 (192.168.1.5): 56 data bytes

64 bytes from 192.168.1.5: seq=0 ttl=64 time=4.750 ms

--- 192.168.1.5 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss # succeeds

round-trip min/avg/max = 4.750/4.750/4.750 ms

Goal: put vm1 and vm3 in VLAN 20, and vm2 and vm4 in VLAN 30.

Set the VLANs:

[root@OS-network-1 ~]# ovs-vsctl set port veth1.0 tag=20

[root@OS-network-1 ~]# ovs-vsctl set port veth2.0 tag=30

[root@OS-network-1 ~]# ovs-vsctl set port veth3.0 tag=20

[root@OS-network-1 ~]# ovs-vsctl set port veth4.0 tag=30
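Setting the four tags one command at a time works, but briefly leaves a mixed layout. ovs-vsctl can also run several commands, separated by `--`, as one atomic database transaction. The helper below is a hypothetical sketch that builds such a transaction from `port=tag` pairs; `apply_vlans`, `run` and `DRY_RUN` are assumed names.

```bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Apply several port=tag assignments in ONE ovs-vsctl transaction.
apply_vlans() {
  [ $# -gt 0 ] || return 1
  local args=() pair
  for pair in "$@"; do
    [ ${#args[@]} -eq 0 ] || args+=(--)                    # separate the commands
    args+=(set port "${pair%%=*}" "tag=${pair#*=}")         # "veth1.0=20" -> set port veth1.0 tag=20
  done
  run ovs-vsctl "${args[@]}"
}
```

`apply_vlans veth1.0=20 veth2.0=30 veth3.0=20 veth4.0=30` would apply the same layout as the four commands above in a single transaction.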

[root@OS-network-1 ~]# ovs-vsctl show

0c5ffa9a-f512-48af-9885-a35bf745f9a4

Bridge "br-in2"

Port "br-in2"

Interface "br-in2"

type: internal

Port "vethb2"

Interface "vethb2"

Port "veth4.0"

tag: 30

Interface "veth4.0"

Port "veth3.0"

tag: 20

Interface "veth3.0"

Bridge br-in

Port "veth1.0"

tag: 20

Interface "veth1.0"

Port "veth2.0"

tag: 30

Interface "veth2.0"

Port "vethb1"

Interface "vethb1"

Port br-in

Interface br-in

type: internal

ovs_version: "2.11.0"

Test connectivity within the same VLAN and across different VLANs:

# From vm1, ping vm2, vm3, and vm4

# ping 192.168.1.3 -c1

PING 192.168.1.3 (192.168.1.3): 56 data bytes

--- 192.168.1.3 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss

#

# ping 192.168.1.4 -c1

PING 192.168.1.4 (192.168.1.4): 56 data bytes

64 bytes from 192.168.1.4: seq=0 ttl=64 time=5.047 ms

--- 192.168.1.4 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 5.047/5.047/5.047 ms

# ping 192.168.1.5 -c1

PING 192.168.1.5 (192.168.1.5): 56 data bytes

--- 192.168.1.5 ping statistics ---

1 packets transmitted, 0 packets received, 100% packet loss
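To repeat these connectivity checks without reading each ping transcript, a small loop can summarize reachability. This is a hypothetical helper meant to run inside a guest; it avoids bash-only syntax on the assumption that the cirros images provide a busybox sh, and `PING` is an assumed override hook for testing.

```bash
# Hypothetical helper: ping each address once (1 s timeout) and print
# "<ip> ok" or "<ip> fail". PING may name another command; default is ping.
probe() {
  for ip in "$@"; do
    if ${PING:-ping} -c1 -W1 "$ip" >/dev/null 2>&1; then
      echo "$ip ok"
    else
      echo "$ip fail"
    fi
  done
}
```

Running `probe 192.168.1.3 192.168.1.4 192.168.1.5` on vm1 would be expected to report the same fail/ok/fail pattern recorded above.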