03 OpenStack Rocky Manual Deployment

NIC configuration (the network node role is also hosted on the controller):

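Controller eth0 10.211.55.46 # management network

eth1 none # provider interface, no IP assigned

eth2 10.37.132.12 # VXLAN overlay network (local_ip)

Compute eth0 10.211.55.47 # management network

eth1 none # provider interface, no IP assigned

eth2 10.37.132.13 # VXLAN overlay network (local_ip)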

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO="none"

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth1

DEVICE=eth1

ONBOOT=yes

systemctl disable NetworkManager

systemctl stop NetworkManager

systemctl disable firewalld.service

systemctl stop firewalld.service

setenforce 0

cat > /etc/yum.repos.d/openstack-rocky.repo <<'EOF'

[openstack]

name=openstack

baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-rocky/

gpgcheck=0

[Virt]

name=CentOS-$releasever - Base

baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

EOF

yum install -y python-openstackclient

Reference: https://docs.openstack.org/install-guide/

yum install -y mariadb mariadb-server python2-PyMySQL

cat >/etc/my.cnf.d/openstack.cnf <<EOF

[mysqld]

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

systemctl enable mariadb.service

systemctl start mariadb.service

# Secure the MariaDB installation (set the root password, remove anonymous users, etc.)

mysql_secure_installation

yum install -y rabbitmq-server

systemctl enable rabbitmq-server.service

systemctl start rabbitmq-server.service

# Create user openstack with password RABBIT_PASS

rabbitmqctl add_user openstack RABBIT_PASS

# Grant the openstack user configure, write and read permissions on all resources

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

yum install memcached python-memcached -y

# Edit the OPTIONS line in /etc/sysconfig/memcached so that memcached also listens on the controller's management address:

OPTIONS="-l 127.0.0.1,::1,controller"

systemctl enable memcached.service

systemctl start memcached.service

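Keystone has two main functions: user management and service management. To understand how Keystone manages users, you first need to know the following terms:

User: the representation of a person, system or service that uses OpenStack cloud services. Keystone validates the resource requests a user makes. An authenticated user can log in to the OpenStack cloud and access resources with a Token issued by Keystone. A user can be assigned to one or more tenants/projects.

Credential: data that proves a user's identity, such as a username and password, a username and API key, or a Token issued by Keystone after authentication.

Authentication: the process of confirming a user's identity. Keystone checks the Credential the user presents. On first authentication the user supplies a username/password or username/API key. Once that Credential is verified, Keystone issues an authentication Token that the user presents in subsequent requests.

Token: a string issued by Keystone for accessing OpenStack APIs and resources. Tokens have a limited lifetime and can also be revoked at any time. In OpenStack a Token is scoped to a specific Tenant, so a user who belongs to several Tenants holds a separate Token for each.

Tenant (also called Project): a container used to group or isolate resources. A Tenant may correspond to a cloud customer, a service account, an organization or a project. A user can belong to several Tenants and must belong to at least one. The limits on resources usable within a Tenant are called Tenant Quotas, for example CPU and memory limits.

Service: a service provided by OpenStack, such as Nova, Swift or Glance. Each service exposes one or more Endpoints through which users in different roles access and operate on resources.

Endpoint: the network address, usually a URL, on which a Service listens for requests. A client must go through a Service's Endpoint to reach it. In a multi-Region deployment the same Service has a different Endpoint in each Region, and every Endpoint carries a Region attribute. Services also reach each other through Endpoints. For example, when Nova needs an image from Glance, it first looks up Glance's Endpoint in Keystone's service catalog and then calls that Endpoint. A service usually offers three kinds of Endpoint. adminurl is accessible only to users with the admin role. internalurl is used by OpenStack services to communicate with each other. publicurl is used by all other users. In Identity API v3 these are the admin, internal and public interfaces, which the bootstrap command below creates for Keystone itself.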

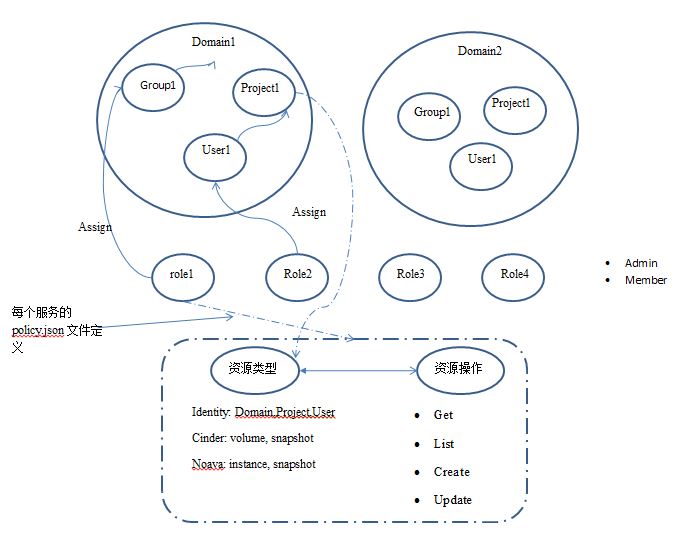

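Role: a role can be thought of as a set of access-control rules (an ACL). The Token that Keystone issues to a user carries the user's list of roles. The service being accessed uses those roles to decide which operations the user may perform and which resources the user may access. Older Keystone releases used admin and _member_ as default roles. In Rocky, keystone-manage bootstrap creates admin, member and reader (see the openstack role list output later in these notes).

Policy: besides verifying a user's identity, Keystone-based authorization must decide whether the user may access a given service, based on Role. The Policy mechanism controls whether a given User in a given Tenant may perform a given operation. What a User with a particular Role can and cannot do is defined through Policy. For Keystone, a Policy is a JSON file whose default location is /etc/keystone/policy.json. Editing this file implements role-based access control. Since Queens, default policies are also defined in code, so the file only needs to contain overrides.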

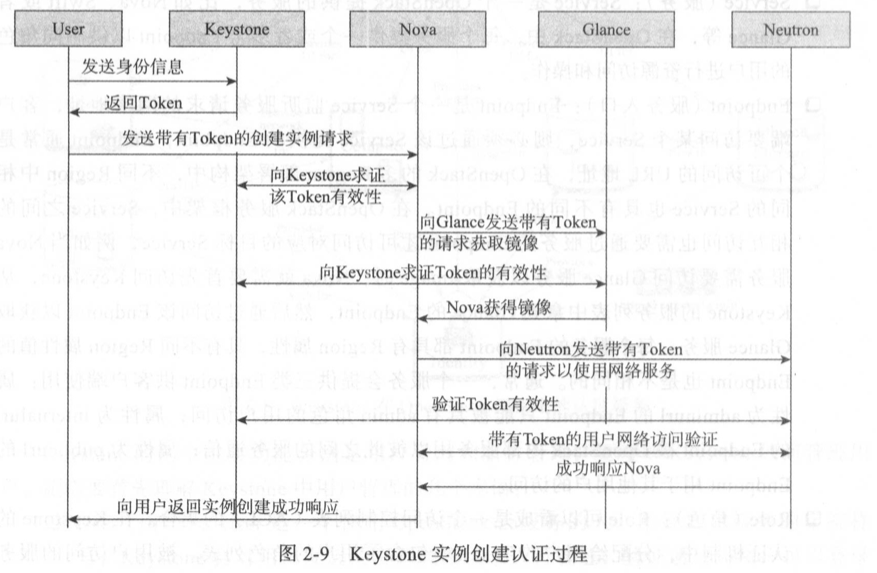
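The User / Project / Role / Policy relationships described above can be sketched as a toy model in Python. This is a hypothetical illustration, not Keystone's implementation. The operation names (`image:list`, `image:delete`) and the policy table are made up, and real policy rules are far richer.

```python
# Hypothetical, simplified model of Keystone role assignments and a policy
# check. It is NOT Keystone's code. It only shows that a role assignment links
# a user to a project through a role, and that a policy maps an operation to
# the roles allowed to perform it.
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Assignment:
    user: str
    project: str
    role: str


# Assignments mirroring commands used in these notes.
ASSIGNMENTS = {
    Assignment("admin", "admin", "admin"),
    Assignment("glance", "service", "admin"),
    Assignment("myuser", "myproject", "myrole"),
}

# Hypothetical policy: operation name -> roles allowed to perform it.
# Real services use their own rule names and a richer rule language.
POLICY: Dict[str, Set[str]] = {
    "image:delete": {"admin"},
    "image:list": {"admin", "member", "myrole"},
}


def roles_for(user: str, project: str) -> Set[str]:
    """Roles that a token scoped to (user, project) would carry."""
    return {a.role for a in ASSIGNMENTS if a.user == user and a.project == project}


def is_allowed(operation: str, user: str, project: str) -> bool:
    """Allow the operation if any role in the token is listed for it."""
    return bool(roles_for(user, project) & POLICY.get(operation, set()))
```

A user with no role on a project gets no roles in a token scoped to that project. That is why, in this model, `myuser` can list images in `myproject` but not in `admin`.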

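Keystone is involved not only when users authenticate but also in communication between OpenStack services. Take instance creation as an example:

1. The user sends identity information, such as a username and password, to Keystone. After successful validation, Keystone returns a Token.
2. The user sends Nova an instance-creation request that carries the Token. Nova asks Keystone to validate the Token.
3. Once the Token is confirmed valid, Nova sends Glance an image request that carries the Token. Glance also validates the Token with Keystone, then serves the image catalog and image data to Nova.
4. After obtaining the image, Nova sends Neutron a network request that carries the Token. Neutron validates the Token with Keystone, then lets Nova use the network service.
5. Nova boots the instance and notifies the user that creation succeeded.

The figure illustrates how Keystone responds during instance creation.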

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

yum install -y openstack-keystone httpd mod_wsgi

- Edit /etc/keystone/keystone.conf. In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

- In the [token] section, configure the Fernet token provider:

[token]

# ...

provider = fernet
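Every `connection =` and `transport_url =` value in these notes embeds a password in a URL. The helper below is a minimal sketch for building such URLs safely. It assumes the consumer percent-decodes the user and password parts, as SQLAlchemy and oslo.messaging are expected to do. Under that assumption, a password containing `@`, `:` or `/` must be encoded first. The function names are hypothetical.

```python
# Sketch: build oslo.db / oslo.messaging URLs with percent-encoded credentials.
# Assumption: the consuming library percent-decodes user and password.
from urllib.parse import quote


def _userinfo(user: str, password: str) -> str:
    # safe="" also encodes '/', which quote() leaves alone by default.
    return f"{quote(user, safe='')}:{quote(password, safe='')}"


def db_url(user: str, password: str, host: str, database: str) -> str:
    """Value for a [database] connection option using the PyMySQL driver."""
    return f"mysql+pymysql://{_userinfo(user, password)}@{host}/{database}"


def rabbit_url(user: str, password: str, host: str) -> str:
    """Value for a [DEFAULT] transport_url option (default RabbitMQ port)."""
    return f"rabbit://{_userinfo(user, password)}@{host}"
```

With the placeholder passwords used here, the output is identical to the hand-written URLs. With a password such as `p@ss/word`, the output becomes `p%40ss%2Fword` instead of a URL that splits at the wrong `@`.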

su -s /bin/sh -c "keystone-manage db_sync" keystone

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
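`keystone-manage fernet_setup` creates a key repository whose files are named with integers. Under Keystone's convention, key `0` is the staged key, which is promoted on the next rotation. The highest-numbered key is the primary key used to encrypt new tokens. The keys in between are secondary keys, used only for decryption. The sketch below classifies a directory listing under that convention. The default path is an assumption; check `key_repository` in the `[fernet_tokens]` section of keystone.conf.

```python
# Sketch: classify the files of a Keystone Fernet key repository.
# The default directory is an assumption (see [fernet_tokens] key_repository).
import os
from typing import Dict, List, Union

DEFAULT_REPO = "/etc/keystone/fernet-keys"


def classify_keys(names: List[str]) -> Dict[str, Union[int, List[int]]]:
    """Split key file names into staged (0), primary (max) and secondary keys."""
    indexes = sorted(int(n) for n in names if n.isdigit())  # ignore stray files
    if not indexes or indexes[0] != 0:
        raise ValueError("no staged key (file '0') found")
    if len(indexes) < 2:
        raise ValueError("no primary key found")
    return {
        "staged": indexes[0],
        "primary": indexes[-1],
        "secondary": indexes[1:-1],
    }


def classify_repo(path: str = DEFAULT_REPO) -> Dict[str, Union[int, List[int]]]:
    """Classify the key files found in an on-disk repository."""
    return classify_keys(os.listdir(path))
```

Right after setup, the repository typically contains only `0` and `1`, that is, one staged key and one primary key.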

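# Bootstrap the Identity service: create the admin user and its password, the admin/internal/public endpoints, the identity service entity and the region

# --bootstrap-region-id creates the given region (RegionOne) as the default region

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne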

- Edit /etc/httpd/conf/httpd.conf and configure the ServerName option:

ServerName controller

- Create a symbolic link to /usr/share/keystone/wsgi-keystone.conf (copying the file also works):

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

- Enable and start httpd:

systemctl enable httpd.service

systemctl start httpd.service

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3
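python-openstackclient turns the `OS_*` variables above into a Keystone v3 password-authentication request. Below is a minimal sketch of that request in plain Python, assuming the standard Identity v3 API. The client POSTs the body to `$OS_AUTH_URL/auth/tokens`, and Keystone returns the issued token in the `X-Subject-Token` response header. `request_token()` needs a reachable Keystone; `build_auth_body()` does not.

```python
# Sketch: build (and optionally send) a Keystone v3 project-scoped
# password-authentication request from OS_* style variables.
import json
import os
import urllib.request
from typing import Mapping


def build_auth_body(env: Mapping[str, str]) -> dict:
    """JSON body for POST /v3/auth/tokens using the password method."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": env["OS_USERNAME"],
                        "domain": {"name": env["OS_USER_DOMAIN_NAME"]},
                        "password": env["OS_PASSWORD"],
                    }
                },
            },
            "scope": {
                "project": {
                    "name": env["OS_PROJECT_NAME"],
                    "domain": {"name": env["OS_PROJECT_DOMAIN_NAME"]},
                }
            },
        }
    }


def request_token(env: Mapping[str, str] = os.environ) -> str:
    """POST the body to Keystone and return the token (needs a live Keystone)."""
    url = env["OS_AUTH_URL"].rstrip("/") + "/auth/tokens"
    data = json.dumps(build_auth_body(env)).encode()
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req) as resp:  # raises HTTPError on 401
        return resp.headers["X-Subject-Token"]
```

`openstack token issue` performs the equivalent request from the command line.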

# Test (the admin variables above must be exported)

[root@controller ~]# openstack domain list

+---------+---------+---------+--------------------+

| ID | Name | Enabled | Description |

+---------+---------+---------+--------------------+

| default | Default | True | The default domain |

+---------+---------+---------+--------------------+

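A domain contains users, groups and projects.

Roles are global resources: a role created as below does not belong to any domain (its domain_id is None).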

- Create a domain (optional, for demonstration only):

[root@controller ~]# openstack domain create --description "An Example Domain" ljz

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | 82c1257b8382447abe2e8da63ddf2659 |

| name | ljz |

| tags | [] |

+-------------+----------------------------------+

- Create the special service project, which holds the accounts of the individual OpenStack services (image, volume, compute…):

[root@controller ~]# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | a31b3b986b0e403e9528522290b9d221 |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

- Create an unprivileged project, user and role (optional, for demonstration only):

# Create project myproject

[root@controller ~]# openstack project create --domain default --description "Demo Project" myproject

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | a5c4f46fc9864592938407f66397e873 |

| is_domain | False |

| name | myproject |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

# Create user myuser

[root@controller ~]# openstack user create --domain default --password ljzpasswd myuser

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 23012a8d4ac94e8783249eca3eef1e45 |

| name | myuser |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Create role myrole

[root@controller ~]# openstack role create myrole

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | d4899692ea0a4fa29ed78e0fdef073cf |

| name | myrole |

+-----------+----------------------------------+

# Assign role myrole to user myuser on project myproject

[root@controller ~]# openstack role add --project myproject --user myuser myrole

Verify:

[root@controller ~]# openstack domain list

+----------------------------------+---------+---------+--------------------+

| ID | Name | Enabled | Description |

+----------------------------------+---------+---------+--------------------+

| 82c1257b8382447abe2e8da63ddf2659 | ljz | True | An Example Domain |

| default | Default | True | The default domain |

+----------------------------------+---------+---------+--------------------+

[root@controller ~]# openstack project list

+----------------------------------+-----------+

| ID | Name |

+----------------------------------+-----------+

| 0a2f80a8988b438ea6d35b4fd6ec62fc | admin |

| a31b3b986b0e403e9528522290b9d221 | service |

| a5c4f46fc9864592938407f66397e873 | myproject |

+----------------------------------+-----------+

[root@controller ~]# openstack user list

+----------------------------------+--------+

| ID | Name |

+----------------------------------+--------+

| 23012a8d4ac94e8783249eca3eef1e45 | myuser |

| 9a3739a6bc72427e8404eef7f7b7af56 | admin |

+----------------------------------+--------+

[root@controller ~]# openstack role list

+----------------------------------+--------+

| ID | Name |

+----------------------------------+--------+

| 127e836628934a97b383e885b8c27a00 | member |

| 3be495cbe43947989c12b86f4e251f6f | reader |

| 90569dd30c354530b23f2f66a582652f | admin |

| d4899692ea0a4fa29ed78e0fdef073cf | myrole |

+----------------------------------+--------+

[root@controller ~]# openstack role show myrole

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | d4899692ea0a4fa29ed78e0fdef073cf |

| name | myrole |

+-----------+----------------------------------+

Each OpenStack component writes its logs under /var/log/NAME/ (for example /var/log/keystone/).

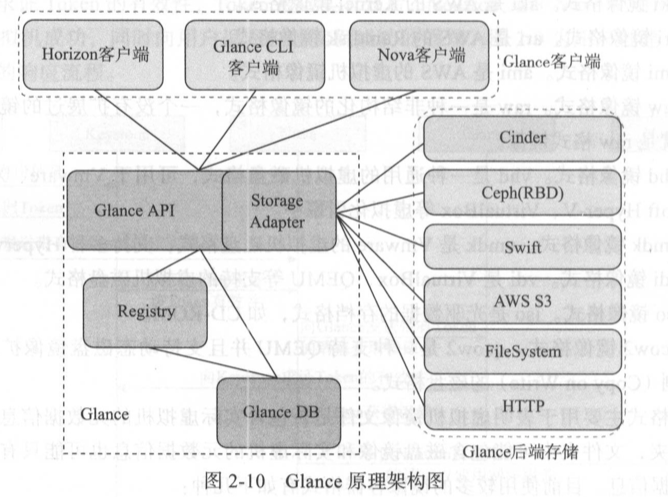

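Glance handles registering, querying and delivering images. An image in Glance can be one that a user built and uploaded, or a copy taken as a snapshot of an existing instance. Both kinds can be used to deploy instances quickly. Through Glance's standard RESTful API, OpenStack users and administrators on many servers can query and access stored images in parallel. By default, Glance stores uploaded images in a directory on the host that runs the Glance service (/var/lib/glance/images). The Glance API service can be configured with an image cache to speed up responses. Glance supports several storage back ends, such as the local file system, Swift, AWS S3-compatible object storage and Ceph.

The OpenStack Image service consists mainly of two services, glance-api and glance-registry. Image data is stored in two parts. glance-registry keeps the image metadata in the database. glance_store stores the image chunk data in, and retrieves it from, one of several back-end stores.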

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

# Create the glance user

openstack user create --domain default --password GLANCE_PASS glance

# Add the admin role to the glance user in the service project (gives glance admin rights on the service project)

openstack role add --project service --user glance admin

# Create the glance service entity

openstack service create --name glance --description "OpenStack Image" image

# Create the Image service API endpoints (glance-api address)

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292

yum install -y openstack-glance

- Edit /etc/glance/glance-api.conf. In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

- In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

# domain that the project belongs to

project_domain_name = Default

# domain that the user belongs to

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

# ...

flavor = keystone

- In the [glance_store] section, configure the local file system store and the directory that holds image files:

[glance_store]

# ...

# Enable the file and http stores; http is read-only (images cannot be uploaded to it)

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/
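oslo.config parses all of these service configuration files. It treats only lines that begin with `#` or `;` as comments and does not strip a trailing `# ...` after a value, so that text silently becomes part of the value. The heuristic checker below flags such lines. It may also flag a value that legitimately contains ` #`, so review its hits by hand. The function names are hypothetical.

```python
# Sketch: flag "key = value  # comment" lines in oslo.config style INI files,
# where the trailing comment would become part of the value.
import re
from typing import Iterable, List, Tuple

# A key/value line (not itself a comment) whose value contains whitespace + '#'.
_INLINE = re.compile(r"^\s*[^#;\s][^=]*=.*\s#")


def find_inline_comments(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line number, line) for every suspicious line."""
    hits = []
    for lineno, line in enumerate(lines, start=1):
        if _INLINE.match(line):
            hits.append((lineno, line.rstrip("\n")))
    return hits


def lint_file(path: str) -> List[Tuple[int, str]]:
    """Run the check on one configuration file."""
    with open(path, encoding="utf-8") as fh:
        return find_inline_comments(fh)
```

For example, `lint_file("/etc/glance/glance-api.conf")` returns the `(line number, line)` pairs to fix. Move each comment onto its own line.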

- Edit /etc/glance/glance-registry.conf. In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

- In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

# ...

flavor = keystone

su -s /bin/sh -c "glance-manage db_sync" glance

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

# Download the image file

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

# Upload the image

openstack image create "cirros" \
  --file cirros-0.4.0-x86_64-disk.img \
  --disk-format qcow2 --container-format bare \
  --public

# List images

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 671fb349-5ae7-480c-94d3-28d0a0bdace7 | cirros | active |

+--------------------------------------+--------+--------+
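The upload command passes `--disk-format qcow2`, and that value should match the actual file. A qcow2 file begins with the 4-byte magic `QFI\xfb`, so a minimal check can be sketched as below. It only distinguishes qcow2 from everything else. When `qemu-img info FILE` is available, it gives a fuller answer.

```python
# Sketch: check whether a disk image file is qcow2 by its 4-byte magic.
QCOW2_MAGIC = b"QFI\xfb"


def is_qcow2_header(head: bytes) -> bool:
    """True if the given leading bytes start with the qcow2 magic."""
    return head[:4] == QCOW2_MAGIC


def is_qcow2_file(path: str) -> bool:
    """Read the first 4 bytes of the file and test them."""
    with open(path, "rb") as fh:
        return is_qcow2_header(fh.read(4))
```

For example, `is_qcow2_file("cirros-0.4.0-x86_64-disk.img")` should be true before you upload the file with `--disk-format qcow2`.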

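The Image service and other OpenStack projects do not currently use the container format. If you are unsure, set the container format to bare, which means the image has no container or metadata envelope.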

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

# Create the nova user

openstack user create --domain default --password NOVA_PASS nova

# Add the admin role to the nova user in the service project

openstack role add --project service --user nova admin

# Create the compute service entity

openstack service create --name nova --description "OpenStack Compute" compute

# Create the Compute API endpoints

openstack endpoint create --region RegionOne \
  compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne \
  compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne \
  compute admin http://controller:8774/v2.1

# Create the Placement service user: placement

openstack user create --domain default --password PLACEMENT_PASS placement

# Add the admin role to the placement user in the service project

openstack role add --project service --user placement admin

# Create the Placement API service entity (a distinct name avoids two services both called nova)

openstack service create --name placement --description "Placement API" placement

# Create the Placement API endpoints

openstack endpoint create --region RegionOne \
  placement public http://controller:8778

openstack endpoint create --region RegionOne \
  placement internal http://controller:8778

openstack endpoint create --region RegionOne \
  placement admin http://controller:8778

yum install -y openstack-nova-api openstack-nova-conductor \
  openstack-nova-console openstack-nova-novncproxy \
  openstack-nova-scheduler openstack-nova-placement-api

- Edit /etc/nova/nova.conf. In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

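A resource provider can be a compute node, a shared storage pool or an IP allocation pool. The Placement service tracks the inventory and usage of each provider. For example, an instance created on a compute node may consume three kinds of resources from three providers: CPU and memory from the compute node's resource provider, disk from an external shared-storage-pool resource provider, and IP addresses from an external IP-pool resource provider.

- In the [api_database], [database] and [placement_database] sections, configure database access: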

[api_database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[placement_database]

# ...

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement
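The Placement paragraph above describes providers, inventory and usage. A provider's usable capacity for each resource class (VCPU, MEMORY_MB, DISK_GB, ...) is `(total - reserved) * allocation_ratio`. A request fits when current usage plus the requested amount stays within that capacity. The sketch below shows only that arithmetic and ignores min_unit, max_unit and step_size. The compute node inventory is invented for illustration.

```python
# Sketch of Placement's capacity arithmetic (min_unit/max_unit/step_size ignored).
from dataclasses import dataclass
from typing import Dict


@dataclass
class Inventory:
    total: int
    reserved: int = 0
    allocation_ratio: float = 1.0

    def capacity(self) -> int:
        """Usable amount after reservation and overcommit."""
        return int((self.total - self.reserved) * self.allocation_ratio)


def fits(inventories: Dict[str, Inventory], usage: Dict[str, int],
         request: Dict[str, int]) -> bool:
    """True if every requested resource class has enough remaining capacity."""
    for rc, amount in request.items():
        inv = inventories.get(rc)
        if inv is None:
            return False  # provider has no inventory of this resource class
        if usage.get(rc, 0) + amount > inv.capacity():
            return False
    return True


# Hypothetical compute node: 4 vCPUs, 8 GiB RAM (512 MiB reserved), 100 GiB disk.
compute01 = {
    "VCPU": Inventory(total=4, allocation_ratio=16.0),
    "MEMORY_MB": Inventory(total=8192, reserved=512, allocation_ratio=1.5),
    "DISK_GB": Inventory(total=100),
}
```

With these made-up numbers, the node can hand out 64 VCPU units, so a 4-vCPU request still fits when 60 are already in use.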

- In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

- In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

- In the [DEFAULT] section, set my_ip to the IP address of the controller node's management interface:

[DEFAULT]

# ...

my_ip = 10.211.55.46

- In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

# ...

use_neutron = true

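# Disable the Nova firewall driver; Neutron provides the firewall instead

firewall_driver = nova.virt.firewall.NoopFirewallDriver

By default, Compute uses an internal firewall driver. Because the Networking service includes its own firewall driver, you must disable the Compute firewall driver by setting nova.virt.firewall.NoopFirewallDriver.

- In the [vnc] section, configure the VNC proxy to use the management interface IP address of the controller node: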

[vnc]

enabled = true

# ...

server_listen = $my_ip

server_proxyclient_address = $my_ip

- In the [glance] section, configure the location of the Image service API:

[glance]

# ...

api_servers = http://controller:9292

- In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

- In the [placement] section, configure the Placement API:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

- A packaging bug in openstack-nova-placement-api-15.0.0-1.el7.noarch requires you to enable access to the Placement API. Add the following configuration to /etc/httpd/conf.d/00-nova-placement-api.conf:

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

# The file after the change:

[root@controller ~]# cat /etc/httpd/conf.d/00-nova-placement-api.conf

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova

WSGIScriptAlias / /usr/bin/nova-placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/nova/nova-placement-api.log

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

</VirtualHost>

Alias /nova-placement-api /usr/bin/nova-placement-api

<Location /nova-placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

systemctl restart httpd

# Populate the nova-api and placement databases

su -s /bin/sh -c "nova-manage api_db sync" nova

# Register the cell0 database

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Create the cell1 cell

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

# Populate the nova database

su -s /bin/sh -c "nova-manage db sync" nova

# Verify that nova cell0 and cell1 are registered correctly

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | 24584ed4-4422-4baf-b0e4-235c29442753 | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

systemctl enable openstack-nova-api.service \
  openstack-nova-consoleauth openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service \
  openstack-nova-consoleauth openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

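nova-consoleauth has been deprecated since 18.0.0 (Rocky) and will be removed in an upcoming release. Console proxies should be deployed per cell. On a fresh install (not an upgrade), you probably do not need to install the nova-consoleauth service. For details, see workarounds.enable_consoleauth.

Run the following on the compute node: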

yum install -y libvirt

yum install -y openstack-nova-compute

- Edit /etc/nova/nova.conf. In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

- In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

- In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

- In the [DEFAULT] section, configure the my_ip option:

[DEFAULT]

# ...

# MANAGEMENT_INTERFACE_IP_ADDRESS of the compute node

my_ip = 10.211.55.47

- In the [DEFAULT] section, enable support for the Networking service:

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

- In the [vnc] section, enable and configure remote console access:

[vnc]

# ...

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

- In the [glance] section, configure the location of the Image service API:

[glance]

# ...

api_servers = http://controller:9292

- In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

- In the [placement] section, configure the Placement API:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

Run the following on the controller node:

[root@controller ~]# openstack compute service list --service nova-compute

+----+--------------+---------------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+---------------------+------+---------+-------+----------------------------+

| 9 | nova-compute | openstack-compute01 | nova | enabled | up | 2021-06-24T11:15:27.000000 |

+----+--------------+---------------------+------+---------+-------+----------------------------+

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 24584ed4-4422-4baf-b0e4-235c29442753

Checking host mapping for compute host 'openstack-compute01': b3859d81-d3a8-4e9b-a553-3bfd2919b9ac

Creating host mapping for compute host 'openstack-compute01': b3859d81-d3a8-4e9b-a553-3bfd2919b9ac

Found 1 unmapped computes in cell: 24584ed4-4422-4baf-b0e4-235c29442753

Each time you add compute nodes, run nova-manage cell_v2 discover_hosts on the controller node to register them. Alternatively, set a discovery interval in /etc/nova/nova.conf:

[scheduler]

discover_hosts_in_cells_interval = 300

[root@controller ~]# openstack compute service list

+----+------------------+---------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+---------------------+----------+---------+-------+----------------------------+

| 1 | nova-consoleauth | controller | internal | enabled | up | 2021-06-24T11:16:52.000000 |

| 2 | nova-scheduler | controller | internal | enabled | up | 2021-06-24T11:16:51.000000 |

| 4 | nova-conductor | controller | internal | enabled | up | 2021-06-24T11:16:52.000000 |

| 9 | nova-compute | openstack-compute01 | nova | enabled | up | 2021-06-24T11:16:57.000000 |

+----+------------------+---------------------+----------+---------+-------+----------------------------+
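You can script this check instead of reading the table by eye. The sketch assumes that `openstack compute service list -f json` emits one JSON object per row, keyed by the column headers shown above (`Binary`, `Host`, `Status`, `State`, ...). `fetch()` needs python-openstackclient and the admin `OS_*` variables exported earlier.

```python
# Sketch: report nova services that are disabled or down, from the JSON
# output of `openstack compute service list -f json`.
import json
import subprocess
from typing import List, Tuple


def unhealthy(services_json: str) -> List[Tuple[str, str]]:
    """Return (binary, host) for every service that is not enabled and up."""
    return [
        (row["Binary"], row["Host"])
        for row in json.loads(services_json)
        if row.get("Status") != "enabled" or row.get("State") != "up"
    ]


def fetch() -> str:
    """Run the CLI and return its JSON output."""
    result = subprocess.run(
        ["openstack", "compute", "service", "list", "-f", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout
```

Usage: `for binary, host in unhealthy(fetch()): print(binary, host)`. An empty result means every service is enabled and up.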

[root@controller ~]# openstack catalog list

+----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+----------+-----------+-----------------------------------------+

| glance | image | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | |

| nova | compute | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | |

| nova | placement | RegionOne |

| | | internal: http://controller:8778 |

| | | RegionOne |

| | | public: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | RegionOne |

| | | admin: http://controller:5000/v3/ |

| | | |

+----------+-----------+-----------------------------------------+

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 671fb349-5ae7-480c-94d3-28d0a0bdace7 | cirros | active |

+--------------------------------------+--------+--------+

[root@controller ~]# nova-status upgrade check

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: API Service Version |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Request Spec Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Console Auths |

| Result: Success |

| Details: None |

+--------------------------------+

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

# Create the neutron user

openstack user create --domain default --password NEUTRON_PASS neutron

# Add the admin role to the neutron user in the service project

openstack role add --project service --user neutron admin

# Create the neutron service entity

openstack service create --name neutron --description "OpenStack Networking" network

# Create the Networking service API endpoints

openstack endpoint create --region RegionOne \
  network public http://controller:9696

openstack endpoint create --region RegionOne \
  network internal http://controller:9696

openstack endpoint create --region RegionOne \
  network admin http://controller:9696

These steps normally run on the network node. In this document the network node and the controller node are the same host, so run them on the controller node.

yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

- Edit /etc/neutron/neutron.conf. In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

- In the [DEFAULT] section, enable the ML2 (Modular Layer 2) plug-in, the router service and overlapping IP addresses:

[DEFAULT]

# ...

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

- In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

- In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

- In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes:

[DEFAULT]

# ...

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

# ...

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

- In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

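The ML2 plug-in uses the Linux bridge mechanism to build the layer-2 (bridging and switching) virtual network infrastructure for instances. Edit /etc/neutron/plugins/ml2/ml2_conf.ini:

- In the [ml2] section, enable flat, VLAN and VXLAN networks: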

[ml2]

# ...

type_drivers = flat,vlan,vxlan

- In the [ml2] section, enable VXLAN self-service networks:

[ml2]

# ...

tenant_network_types = vxlan

- In the [ml2] section, enable the Linux bridge and layer-2 population mechanisms:

[ml2]

# ...

mechanism_drivers = linuxbridge,l2population

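Once the ML2 plug-in is configured, removing values from the type_drivers option can lead to database inconsistency.

- In the [ml2] section, enable the port security extension driver: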

[ml2]

# ...

extension_drivers = port_security

- In the [ml2_type_flat] section, configure the provider virtual network as a flat network:

[ml2_type_flat]

# ...

flat_networks = provider

- In the [ml2_type_vxlan] section, configure the VXLAN network identifier range for self-service networks:

[ml2_type_vxlan]

# ...

vni_ranges = 1:1000
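`vni_ranges` is a comma-separated list of `MIN:MAX` pairs. A VXLAN Network Identifier is a 24-bit field, so usable values lie in 1..16777215. With `1:1000`, this deployment can allocate at most 1000 self-service VXLAN networks. Below is a small parser and counter sketch. It assumes non-overlapping ranges, and the names are hypothetical.

```python
# Sketch: parse an ML2 [ml2_type_vxlan] vni_ranges value and count its VNIs.
from typing import List, Tuple

MAX_VNI = 2**24 - 1  # VNI is a 24-bit field


def parse_vni_ranges(value: str) -> List[Tuple[int, int]]:
    """Turn "1:1000,2001:3000" into [(1, 1000), (2001, 3000)]."""
    ranges = []
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        lo_s, hi_s = part.split(":")  # ValueError if ':' is missing
        lo, hi = int(lo_s), int(hi_s)
        if not (1 <= lo <= hi <= MAX_VNI):
            raise ValueError(f"invalid VNI range: {part}")
        ranges.append((lo, hi))
    return ranges


def vni_count(value: str) -> int:
    """Number of VNIs (and therefore VXLAN networks) the ranges allow."""
    return sum(hi - lo + 1 for lo, hi in parse_vni_ranges(value))
```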

- In the [securitygroup] section, enable ipset to make security group rules more efficient:

[securitygroup]

# ...

enable_ipset = true

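The Linux bridge agent builds the layer-2 (bridging and switching) virtual network infrastructure for instances and handles security groups. Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini:

- In the [linux_bridge] section, map the provider virtual network to the provider physical network interface:

[linux_bridge]

physical_interface_mappings = provider:eth1

This must be the NIC prepared earlier without an IP address (eth1 in the NIC plan at the top). eth2 carries the VXLAN overlay address that local_ip uses below.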

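- In the [vxlan] section, enable VXLAN overlay networks, set local_ip to the IP address of the physical interface that carries overlay traffic, and enable layer-2 population: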

[vxlan]

enable_vxlan = true

local_ip = 10.37.132.12

l2_population = true
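VXLAN encapsulation enlarges every frame, so the instance MTU on self-service networks must be smaller than the MTU of the overlay interface (eth2 here). Over an IPv4 underlay the overhead is 50 bytes: outer IP header (20), UDP (8), VXLAN header (8) and inner Ethernet header (14). Assuming the default 1500-byte physical MTU, Neutron is expected to give VXLAN networks an MTU of 1450. Here is the arithmetic as a sketch:

```python
# Sketch: MTU left for instances on a VXLAN network, given the underlay MTU.
OUTER_IP_HEADER = {4: 20, 6: 40}  # bytes, by underlay IP version
UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14


def vxlan_tenant_mtu(physical_mtu: int = 1500, ip_version: int = 4) -> int:
    """Physical MTU minus the VXLAN encapsulation overhead."""
    overhead = OUTER_IP_HEADER[ip_version] + UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET
    return physical_mtu - overhead
```

If instances on self-service networks see stalled large transfers, compare the MTU inside the guest with this value.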

- In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

- Make sure your Linux kernel supports network bridge filters by verifying that all of the following sysctl values are set to 1:

net.bridge.bridge-nf-call-iptables

net.bridge.bridge-nf-call-ip6tables

# sysctl -a |grep -E 'net.bridge.bridge-nf-call-iptables|net.bridge.bridge-nf-call-ip6tables'

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
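You can run the same check without `sysctl` by reading `/proc/sys` directly. A missing file usually means the br_netfilter module is not loaded, which causes the `cannot stat` error described in the compute node section. Below is a minimal sketch. The `root` parameter exists only so the function can be tested against a fake directory.

```python
# Sketch: read the bridge netfilter sysctls from /proc/sys and check them.
import os
from typing import Dict, Optional

KEYS = ("net.bridge.bridge-nf-call-iptables", "net.bridge.bridge-nf-call-ip6tables")


def read_sysctls(root: str = "/proc/sys") -> Dict[str, Optional[str]]:
    """Map each key to its value, or None if the file does not exist."""
    values: Dict[str, Optional[str]] = {}
    for key in KEYS:
        path = os.path.join(root, *key.split("."))
        try:
            with open(path) as fh:
                values[key] = fh.read().strip()
        except FileNotFoundError:
            values[key] = None  # br_netfilter probably not loaded
    return values


def bridge_filtering_ok(root: str = "/proc/sys") -> bool:
    """True only if both sysctls exist and are set to 1."""
    return all(v == "1" for v in read_sysctls(root).values())
```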

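The layer-3 (L3) agent provides routing and NAT services for self-service virtual networks. Edit /etc/neutron/l3_agent.ini:

- In the [DEFAULT] section, configure the Linux bridge interface driver: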

[DEFAULT]

# ...

interface_driver = linuxbridge

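The DHCP agent provides DHCP services for virtual networks. Edit /etc/neutron/dhcp_agent.ini:

- In the [DEFAULT] section, configure the Linux bridge interface driver and the Dnsmasq DHCP driver. Also enable isolated metadata so that instances on provider networks can reach metadata over the network: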

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

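The metadata agent provides configuration information, such as credentials, to instances. Edit /etc/neutron/metadata_agent.ini:

- In the [DEFAULT] section, configure the metadata host and the shared secret: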

[DEFAULT]

# ...

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

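- Edit /etc/nova/nova.conf. In the [neutron] section, configure access parameters, enable the metadata proxy and configure the shared secret: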

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET
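METADATA_SECRET must be identical in `metadata_agent.ini` `[DEFAULT]` and in nova.conf `[neutron]`. The metadata proxy signs requests with it, and the Nova metadata API is expected to reject requests whose signature does not match. The sketch below generates a random secret and compares the two files. It assumes the default package paths, and the function names are hypothetical.

```python
# Sketch: generate a metadata shared secret and verify both files agree.
import configparser
import secrets
from typing import Optional


def new_secret() -> str:
    """Random value for METADATA_SECRET (32 hexadecimal characters)."""
    return secrets.token_hex(16)


def shared_secret(ini_text: str, section: str) -> Optional[str]:
    """Return metadata_proxy_shared_secret from one section of an INI text."""
    # interpolation=None keeps '%' literal; strict=False tolerates repeated
    # sections or options in hand-edited files (the last value wins).
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)
    if section == "DEFAULT":
        return parser.defaults().get("metadata_proxy_shared_secret")
    return parser.get(section, "metadata_proxy_shared_secret", fallback=None)


def secrets_match(agent_ini: str = "/etc/neutron/metadata_agent.ini",
                  nova_conf: str = "/etc/nova/nova.conf") -> bool:
    """Compare the secret in metadata_agent.ini [DEFAULT] and nova.conf [neutron]."""
    with open(agent_ini, encoding="utf-8") as fh:
        agent = shared_secret(fh.read(), "DEFAULT")
    with open(nova_conf, encoding="utf-8") as fh:
        nova = shared_secret(fh.read(), "neutron")
    return agent is not None and agent == nova
```

Put the output of `new_secret()` in place of METADATA_SECRET in both files, then run `secrets_match()` on the controller before restarting the services.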

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
  --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl enable neutron-l3-agent.service

systemctl start neutron-l3-agent.service

systemctl enable neutron-linuxbridge-agent

systemctl start neutron-linuxbridge-agent

The compute node handles connectivity and security groups for instances.

yum install -y openstack-neutron-linuxbridge ebtables ipset

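- Edit /etc/neutron/neutron.conf. In the [database] section, comment out any connection options, because compute nodes do not access the database directly.

- In the [DEFAULT] section, configure RabbitMQ message queue access: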

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

- In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

- In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

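- Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini. In the [linux_bridge] section, map the provider virtual network to the provider physical network interface (eth1, the NIC without an IP address):

[linux_bridge]

physical_interface_mappings = provider:eth1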

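- In the [vxlan] section, enable VXLAN overlay networks, set local_ip to the IP address of the physical interface that carries overlay traffic, and enable layer-2 population: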

[vxlan]

enable_vxlan = true

local_ip = 10.37.132.13

l2_population = true

- In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

- Make sure your Linux kernel supports network bridge filters by verifying that all of the following sysctl values are set to 1:

net.bridge.bridge-nf-call-iptables

net.bridge.bridge-nf-call-ip6tables

# sysctl -a |grep -E 'net.bridge.bridge-nf-call-iptables|net.bridge.bridge-nf-call-ip6tables'

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Error: sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

Fix: modprobe br_netfilter

To load the module automatically at boot:

cat > /etc/sysconfig/modules/br_netfilter.modules <<'EOF'
#!/bin/bash
/sbin/modinfo -F filename br_netfilter > /dev/null 2>&1
if [ $? -eq 0 ]; then
    /sbin/modprobe br_netfilter
fi
EOF
chmod 755 /etc/sysconfig/modules/br_netfilter.modules

- Edit /etc/nova/nova.conf. In the [neutron] section, configure access parameters:

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

systemctl restart openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent.service

systemctl start neutron-linuxbridge-agent.service

yum install -y openstack-dashboard

- Edit /etc/openstack-dashboard/local_settings. Configure the dashboard to use OpenStack services on the controller node:

OPENSTACK_HOST = "controller"

- Allow your hosts to access the dashboard:

#ALLOWED_HOSTS = ['one.example.com', 'two.example.com']

ALLOWED_HOSTS = ['*']  # accept any host name: convenient for a lab, potentially insecure in production

- Configure the memcached session storage service:

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

- Enable Identity API version 3:

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

- Enable support for domains:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

- Configure the API versions:

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

- Configure Default as the default domain for users created via the dashboard:

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

- Configure the default role for users created via the dashboard:

#OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "_member_"

- (Optional) Configure the time zone:

TIME_ZONE = "Asia/Shanghai"

If it is not already present, add the following line to /etc/httpd/conf.d/openstack-dashboard.conf:

WSGIApplicationGroup %{GLOBAL}

systemctl restart httpd.service memcached.service

Access: http://controller/dashboard

Username/password: admin/ADMIN_PASS

Domain: default